More than 75% of QA teams surveyed by Perforce identified AI-driven testing as a pivotal part of their 2025 strategy. Yet actual adoption lags behind: only 16% report having implemented AI in QA. Why the gap? Alongside the fear of missing out on this trend, there is still a great deal of confusion around AI in QA testing.

While QA conferences, tech podcasts, and investor meetings make AI adoption sound like a good thing to do, for many teams and CTOs, it’s far from clear what this means in practice. All that noise around AI creates a smoke-and-mirrors effect, making it hard to understand how to implement AI in testing in a way that brings real value.

At DeviQA, we’ve set out to dispel the most popular misconceptions about AI in QA services and help you avoid wasted budgets, mismatched expectations, and QA strategies that overpromise or underdeliver.

Hype and hesitation around AI for QA testing

In recent years, AI has become the must-have talking point in quality assurance discussions. In boardrooms and stand-ups, it's now part of the conversations about scaling QA, reducing testing cycles, or catching bugs earlier.

But alongside that enthusiasm comes a fair amount of hesitation. With bold claims from vendors and a lack of established best practices, it's no surprise that teams are unsure where to begin or how to measure value.

To move forward with confidence, decision-makers need clarity on what’s possible, what’s practical, and what’s just noise.

Misconception 1: AI is here to replace human testers

There’s a common belief, or fear (if you wish), that bringing AI into QA will replace people. However, the truth is that efficient software testing is impossible without human judgment, no matter how advanced AI-powered tools are.

AI can help with repetitive tasks, pattern recognition, and data analysis. Yet, it doesn’t know your product, your users, or your business priorities the way your team does.

Besides, it’s wrong to think that software testing involves just automated button clicking and following predefined steps. In fact, it demands thinking critically, asking the right questions, and knowing when something just feels off – things that come from people, not algorithms. So, ad-hoc testing services are still in high demand.

Think of AI in QA as an autopilot in aviation. While most commercial airliners have an autopilot system, hardly anyone seriously considers the possibility of pilot-free flights.

An autopilot system can precisely follow a flight plan, control speed and altitude, and even execute auto-landing in low-visibility conditions, but it fails to handle takeoff, respond to unexpected situations, or make split-second decisions when something goes wrong. So, autopilot systems are embedded not to replace pilots but to enable them to stay focused, reduce fatigue during long flights, and navigate more precisely.

The same applies to AI-powered testing. Its goal isn’t to push testers out but to equip them with better tools. When people and algorithms work together, testing is faster, smarter, and more comprehensive.

So, will AI replace software testers? No, AI won’t replace you, but someone who knows how to use it efficiently actually might.

Listen to the Devico Breakfast Bar podcast to learn what Adam Sandman, founder and CEO of Inflectra Corporation, thinks about the human role in AI-powered test automation.

Misconception 2: AI-powered QA is plug-and-play

While AI tools immensely streamline the QA process, they don’t work right out of the box. To get real value from them, teams should invest time and effort in setup, configuration, integration, and maintenance.

First, AI-driven testing tools need to be connected to your CI/CD pipeline, and this isn’t always seamless. Compatibility issues with existing infrastructure are rather common. Data pipelines may also need adjustment to support new workflows.

Second, AI in test automation requires well-structured and relevant training data. If your existing test results are noisy or incomplete, the models won’t learn much from them. Be ready to take your time to clean up test histories, properly tag data, and clearly define success criteria that reflect the product’s actual behavior.
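As a rough illustration of that cleanup step, here is a minimal Python sketch. It assumes a hypothetical `RunRecord` structure and an arbitrary flakiness threshold; neither comes from any particular tool, and real test histories will need their own rules.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RunRecord:
    """One historical test execution (hypothetical structure for illustration)."""
    test_id: str
    outcome: Optional[str]    # "pass", "fail", or None if the result was never recorded
    component: Optional[str]  # tag linking the test to a product area


def flip_rate(outcomes: List[str]) -> float:
    """Share of consecutive runs whose outcome changed (a simple flakiness signal)."""
    if len(outcomes) < 2:
        return 0.0
    flips = sum(a != b for a, b in zip(outcomes, outcomes[1:]))
    return flips / (len(outcomes) - 1)


def clean_history(runs: List[RunRecord], flaky_threshold: float = 0.3) -> List[RunRecord]:
    """Drop incomplete or untagged records, then drop tests that flip too often."""
    complete = [r for r in runs if r.outcome in ("pass", "fail") and r.component]

    outcomes_by_test: Dict[str, List[str]] = {}
    for r in complete:
        outcomes_by_test.setdefault(r.test_id, []).append(r.outcome)

    stable = {t for t, o in outcomes_by_test.items() if flip_rate(o) <= flaky_threshold}
    return [r for r in complete if r.test_id in stable]
```

The threshold of 0.3 is an assumption chosen for the example. In practice, a team would pick it after looking at how noisy its own history actually is.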

Also, you may face a challenge in aligning AI tools with your test case repositories. Considerable fine-tuning is usually required to enable AI systems to understand the logic behind your test suites and, ultimately, identify possible improvements.

Finally, apart from initial legwork, AI in automation testing also demands ongoing maintenance. If left unmanaged, AI models become less accurate over time. Regular monitoring, retraining, and tuning are vital to keep them effective and reliable.
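One simple way to picture that monitoring is a rolling check on how often the tool's flagged failures turn out to be real defects. The sketch below is an assumption-heavy illustration: the `PrecisionMonitor` class, the window size, and the 0.8 threshold are all hypothetical and not taken from any specific product.

```python
from collections import deque
from typing import Optional


class PrecisionMonitor:
    """Tracks how many recent AI-flagged failures were confirmed by a human."""

    def __init__(self, window: int = 50, min_precision: float = 0.8) -> None:
        self.results = deque(maxlen=window)  # keeps only the most recent verdicts
        self.min_precision = min_precision

    def record(self, confirmed: bool) -> None:
        """Store a triage verdict: True if the flagged failure was a real defect."""
        self.results.append(confirmed)

    def precision(self) -> Optional[float]:
        """Share of confirmed flags in the window, or None if nothing is recorded."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self) -> bool:
        """True only when the window is full and precision is below the threshold."""
        p = self.precision()
        if p is None or len(self.results) < self.results.maxlen:
            return False
        return p < self.min_precision
```

Waiting for a full window before raising the flag is a deliberate choice here: it avoids triggering retraining on the first couple of unlucky results.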

Misconception 3: A QA strategy isn’t compulsory when AI steps into software testing

It’s a big mistake to overestimate the capabilities of AI. AI-driven tools are great at supporting your testing efforts - they can recognize patterns, suggest improvements, and help refine your strategies. Yet, they don’t decide what matters most to your product, what risks to cover, and how to stay aligned with business goals and compliance requirements. Thoughtful planning, broad context, and cross-team communication are required for this. So, having a QA strategy in place is still a must.

Consider a test strategy as your map and AI as your compass. The map tells you where you need to go and what to watch out for. The compass helps you stay on course. Along with these, you still need experienced QA engineers at the wheel to decide when to speed up, slow down, or reroute based on current priorities.

Misconception 4: Only large enterprises can afford AI tools

There is an opinion that, because of its cost and complexity, AI for QA testing is reserved only for large companies with huge teams, massive budgets, and custom infrastructure. This is a myth.

In reality, many AI-driven tools (Owlity is one of them) are now designed to support startups, smaller teams, and even individual testers.

You can find AI-powered testing platforms that offer usage-based pricing models and scale with your actual testing needs. No huge upfront contracts – you pay for what you actually use. There are even open-source options that help teams experiment with AI-enhanced test flows without significant investment.

Also, some AI solutions require minimal setup, which helps small teams without in-house DevOps get started. On top of that, many tools are API-first, allowing faster integration into CI/CD platforms like GitHub Actions, CircleCI, or Jenkins.

AI-powered QA outsourcing services are also quite affordable, especially if you consider hiring an offshore vendor.

In short, AI in QA is no longer a luxury. Whether you’re running a startup or a mid-sized team, you can start small, experiment to find out what fits best, and expand as your needs grow.

Misconception 5: AI-generated test data meets compliance and real-world coverage

While AI-generated test data is undeniably helpful, it doesn’t check all boxes for compliance and comprehensive testing. Here are some nuances you need to know.

In general, AI-generated synthetic data helps organizations bypass privacy risks tied to GDPR, HIPAA, CCPA, and similar regulations. With AI-generated synthetic datasets that have structure and statistical properties like real data, teams avoid the legal pitfalls of handling sensitive information.

However, not all synthetic data is automatically compliant. If an AI model has been trained on real user data without strict controls, there’s a chance sensitive information could leak or be mimicked. And unless teams fully understand how the data is generated and anonymized, they could be exposed to regulatory trouble without realizing it.
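A first-pass check for this kind of leakage can be as simple as looking for sensitive values that appear verbatim in both the real and the synthetic set. The sketch below assumes records are plain dictionaries, and the `find_leaks` helper and field names are hypothetical. An exact-match check like this catches only the most obvious leaks and does not by itself demonstrate compliance.

```python
from typing import Any, Dict, Iterable, List, Tuple

Record = Dict[str, Any]


def find_leaks(
    real_rows: Iterable[Record],
    synthetic_rows: Iterable[Record],
    sensitive_fields: List[str],
) -> List[Tuple[int, str]]:
    """Return (synthetic row index, field) pairs whose value also exists in real data."""
    # Collect every real value per sensitive field, ignoring missing or empty values.
    real_values = {field: set() for field in sensitive_fields}
    for row in real_rows:
        for field in sensitive_fields:
            value = row.get(field)
            if value is not None:
                real_values[field].add(value)

    leaks = []
    for index, row in enumerate(synthetic_rows):
        for field in sensitive_fields:
            value = row.get(field)
            if value is not None and value in real_values[field]:
                leaks.append((index, field))
    return leaks
```

Any non-empty result is a signal to stop and review how the data was generated before it goes anywhere near a test environment.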

In terms of coverage, AI can generate plenty of realistic and varied data for common test scenarios quickly, often within one to two hours. But it often struggles when it comes to edge cases like unexpected user behavior, location-specific logic, or rare exceptions that only humans with domain knowledge would think to test.

All in all, AI-generated data is of great help, but validation is still needed. Teams in regulated industries, like fintech or healthcare, should treat AI-generated test data as a playground, not a ready-to-use product. Regular checks and expert input are what make AI-generated data reliable and audit-ready.

Misconception 6: AI can tackle exploratory testing

Artificial intelligence is indeed good at handling repetitive testing tasks, but exploratory testing remains a completely human-driven QA activity. The main reason is that it requires human intuition, curiosity, creativity, and critical thinking.

In the course of exploratory testing, testers don’t follow scripts because there are no rules or a plan of action. The goal is to challenge a system by asking what-if questions and figuring out unexpected user behavior. A bit messy, it often helps find the most critical bugs.

AI, in turn, always follows patterns. It can create tests, run them at scale, and even simulate user flows. But it doesn’t wonder what happens if you click a button three times in a row or how users feel about certain interactions. Lacking empathy and user-centric thinking, AI cannot assess usability, accessibility, and many other aspects. That is why the idea of AI usability testing and AI exploratory testing isn’t realistic.

Accept as a given that some testing tasks can’t be automated, and this isn’t a gap but just the point.

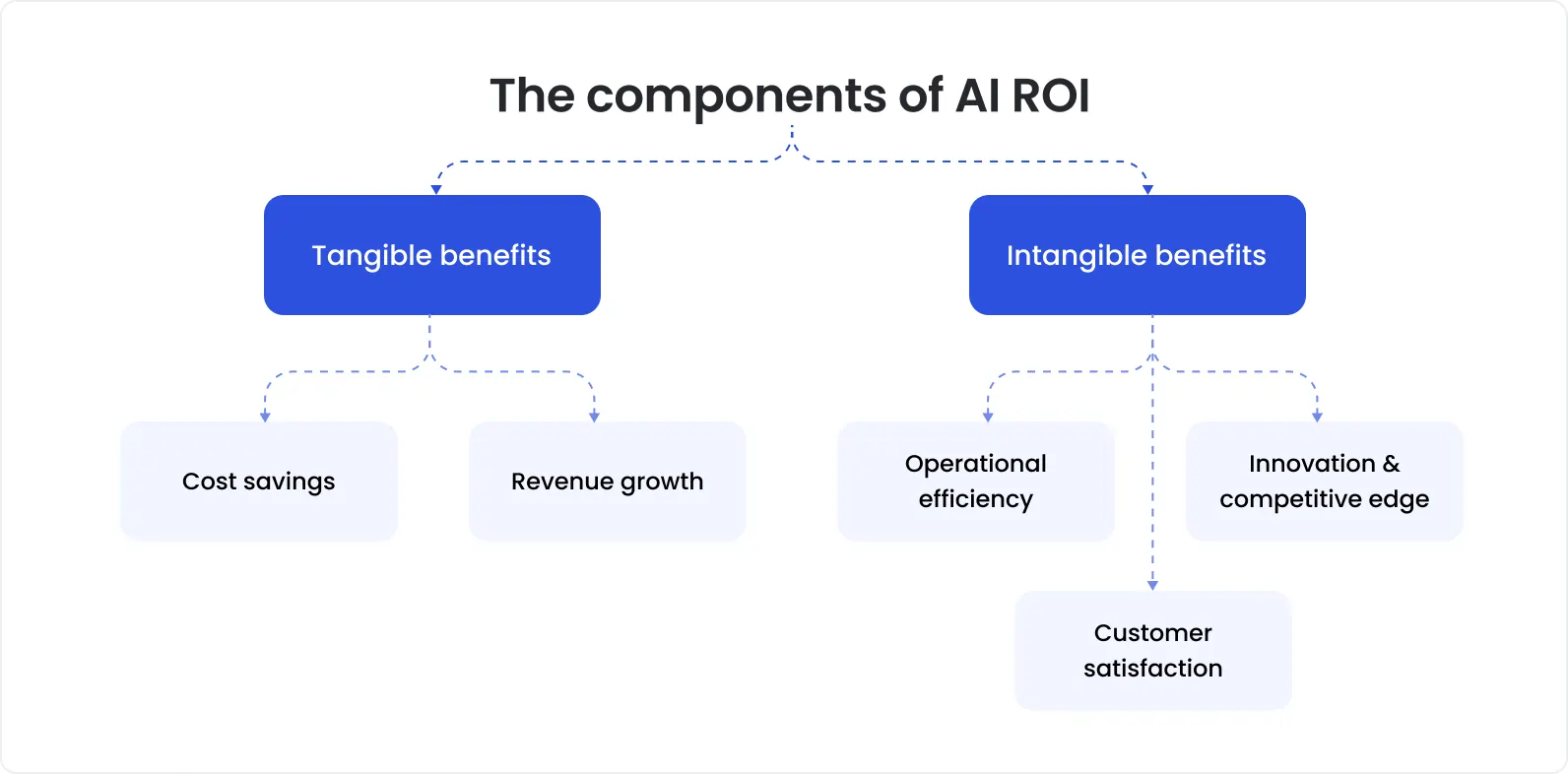

Misconception 7: AI in QA immediately brings high ROI

Getting high ROI right after AI implementation is one more myth. Some teams rush in with this hope and then abandon AI tools too soon, not seeing value immediately.

In reality, achieving tangible ROI usually takes time and strategic implementation - it doesn’t happen instantly. To succeed with AI in QA, teams need to identify high-value use cases, set up infrastructure, adjust workflows, and measure business impact at each implementation stage.

Real benefits of AI in QA, such as reduction in test cycle time, fewer post-release defects, fewer false positives, better test coverage, and smarter resource allocation, contribute to ROI but aren’t gained at once.
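To make "measure business impact at each stage" concrete, a team could compare a few of these metrics before and after each rollout step. The sketch below is purely illustrative: the metric names and all numbers are made up for the example, and the `compare` helper is hypothetical.

```python
from typing import Dict


def percent_change(before: float, after: float) -> float:
    """Relative change in percent; negative means the metric went down."""
    if before == 0:
        raise ValueError("baseline must be non-zero to compute a percent change")
    return (after - before) / before * 100


def compare(baseline: Dict[str, float], current: Dict[str, float]) -> Dict[str, float]:
    """Percent change for every metric present in both snapshots."""
    return {
        name: percent_change(baseline[name], current[name])
        for name in baseline
        if name in current
    }


# Invented figures, for illustration only.
baseline = {"cycle_hours": 40.0, "escaped_defects": 12, "false_positives": 30}
after_pilot = {"cycle_hours": 30.0, "escaped_defects": 9, "false_positives": 15}

report = compare(baseline, after_pilot)
```

Tracking the same small set of metrics at every stage makes it easier to see whether value is accumulating, rather than judging the tool on its first few weeks.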

43% of TestRail survey respondents said AI helped them significantly improve team productivity and test coverage. So, be patient. AI in QA pays off over time if adopted strategically, with human-AI collaboration and ongoing measurement.

Short on time and resources? Teaming up with an experienced QA provider that has a fine-tuned AI infrastructure and workflows in place can help you see value faster.

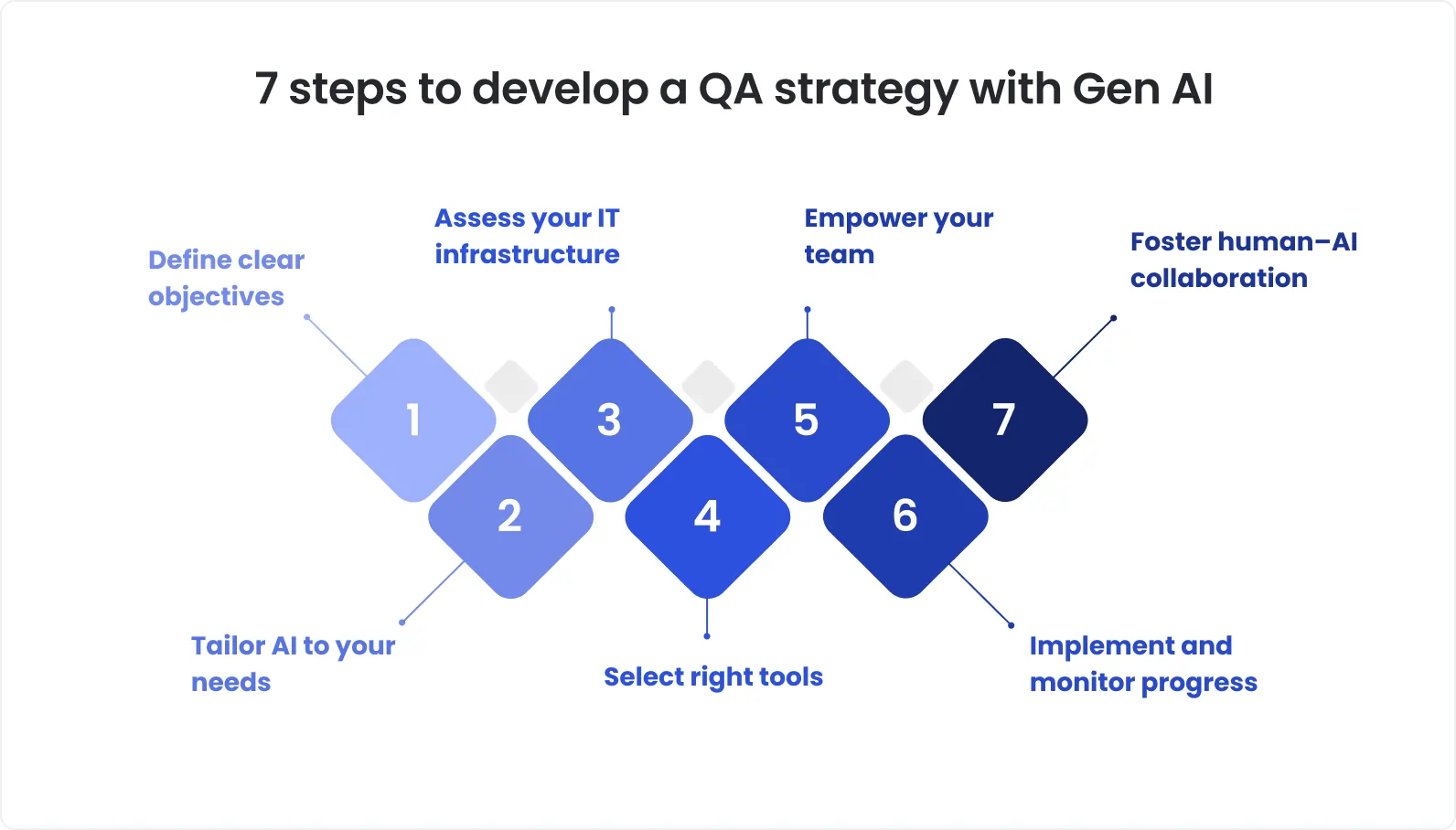

The smart way to implement AI for test automation

Software testing using artificial intelligence isn’t beneficial by default. It works best when aligned with your actual needs, limits, and workflows.

That’s why, before investing time or budget into AI-driven testing, step back and decide what you need to improve (test coverage, speed, or defect detection) and what constraints you face (a lack of expertise, bandwidth, tooling, etc.). Also, evaluate your current QA process to be sure that it’s in the right shape to move forward with AI-enhanced automation.

If some foundational work still needs to be done, it might be better to partner with expert QA vendors who’ve already built AI-supported workflows from scratch. This will help you skip a trial-and-error phase and get results faster.

DeviQA, with its specialized industry knowledge, flexible engagement models, advanced infrastructure, rigorous recruitment process, and independent, professional execution, can be the right QA partner.

Ready to discuss how AI-powered QA can be implemented for your project? Schedule a short discovery session with our team.