Today, QA leaders are under mounting pressure to ship faster, keep quality airtight, and do it all without blowing the budget. As release cycles shrink and users become more demanding, balancing quality, speed, and budget gets harder.

Under these conditions, traditional automated regression testing is starting to hit a ceiling. The good news is that broad adoption of artificial intelligence in software testing offers a way forward. Supercharged with AI, functional and regression testing can deliver high quality at high speed while driving testing costs down and ROI up.

But here’s the catch: just adding AI doesn’t guarantee high ROI. To deliver the desired results, AI automation testing has to be implemented wisely, ideally by seasoned QA experts.

Our guide will show you where the ROI levers of AI for QA testing are hidden and how to pull them as far as they can go.

Meaning of ROI in QA testing services

In too many boardrooms, QA is still treated as a ‘necessary expense.’ It’s seen as the activity that slows things down, signs off on releases, and eats budget without directly generating revenue. That is a distorted picture of software testing. When done right, QA is not a cost center but a value multiplier. ROI makes this visible by measuring the value that testing activities deliver against the resources spent on them.

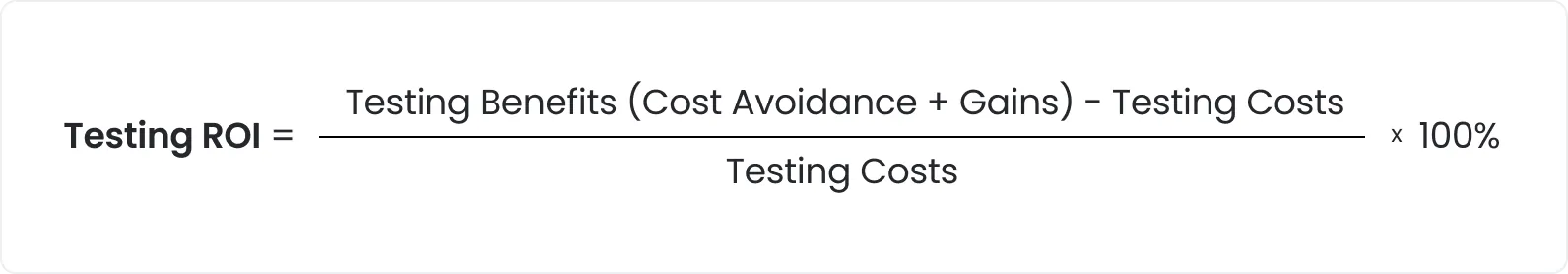

The simplified ROI formula for software testing looks like this:
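Written out, and assuming the standard net-benefit-over-cost definition that matches the benefit and cost breakdown in this section, the formula takes roughly this form:

```latex
\[
\text{ROI} = \frac{\text{Testing benefits} - \text{Testing costs}}{\text{Testing costs}} \times 100\%
\]
```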

Testing benefits include:

- Faster release cycles (shorter time-to-market)
- Fewer bugs in production (lower support and hotfix costs)
- Less rework (less time spent on fixing)
- Reduced downtime costs
- Higher user satisfaction and retention

Testing costs include:

- Time (hours spent on performing QA tasks)
- Labor (in-house and/or outsourced QA engineers)
- Tooling and licenses (test management systems, automation frameworks, cloud test grids)
- Test infrastructure (hardware, device farms, environments, VMs, staging servers)
- Training and upskilling (workshops, courses, certifications for testers)
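To make the benefit and cost lists concrete, here is a minimal Python sketch that plugs them into the usual net-benefit-over-cost ROI calculation. The `QaLedger` class, the category names, and all figures are hypothetical and serve only as an illustration; real numbers would come from your own finance and QA data.

```python
from dataclasses import dataclass


@dataclass
class QaLedger:
    """Benefit and cost figures for one period, all in the same currency."""
    benefits: dict[str, float]
    costs: dict[str, float]

    def roi_percent(self) -> float:
        """ROI as (benefits - costs) / costs, expressed as a percentage."""
        total_benefits = sum(self.benefits.values())
        total_costs = sum(self.costs.values())
        if total_costs <= 0:
            raise ValueError("total costs must be positive")
        return 100 * (total_benefits - total_costs) / total_costs


# Hypothetical yearly figures, for illustration only.
ledger = QaLedger(
    benefits={
        "avoided_hotfixes": 120_000,
        "reduced_downtime": 60_000,
        "less_rework": 45_000,
    },
    costs={
        "labor": 100_000,
        "tooling": 30_000,
        "infrastructure": 15_000,
        "training": 5_000,
    },
)
print(f"ROI: {ledger.roi_percent():.1f}%")
```

Keeping benefits and costs as named categories rather than two totals makes it easier to see which line items move once AI is introduced.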

How AI changes the ROI math

Introducing AI-powered automation into regression testing changes the ROI formula on both the benefit and cost sides.

Benefit side:

By automating routine tasks and providing predictions, AI-augmented testing drives greater gains:

- Faster test execution and feedback loops

AI automates test script generation, test prioritization, and test result analysis, which shortens every test cycle. For example, a Singapore-based insurance SaaS company improved its testing speed by 54%.

- Reduced maintenance costs

With AI under the hood, self-healing test automation frameworks automatically spot and fix issues in test scripts, reducing maintenance time by 40-60%, which directly cuts maintenance costs.

- Fewer post-release defects

AI-powered functional and regression testing ensures better defect detection and prevention. As a result, critical bugs are caught earlier, reducing expensive production fixes and reputational risk. According to IJIRSET, the AI-driven approach can help you reduce the number of bugs reaching production by 30%.

- Resource optimization

With AI-driven QA testing orchestration, teams can get more out of their testing resources by running only the most relevant tests, grouping them efficiently, and executing them in parallel. This cuts down on wasted compute, avoids unnecessary cloud spend, and keeps CI/CD pipelines moving quickly. So you maintain the same level of validation at a lower cost.

Cost side:

While AI helps reduce long-term costs, it does introduce new types of investments:

- Tooling and licensing (AI-enabled testing platforms, plugins, or cloud execution environments)
- Team enablement (time and resources for training a team to work effectively with AI-powered workflows)
- Integration effort (initial setup for AI test selection, model training, and integration with existing CI/CD pipelines)

These costs can be significant upfront, but they are often amortized over time. So, if AI-driven automated testing is implemented well, it pays off with high ROI.
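One simple way to reason about amortization is a payback period: how many months of net savings it takes to recover the upfront spend. The sketch below is an assumption-laden illustration; `payback_months` and all figures are hypothetical.

```python
import math


def payback_months(upfront_cost: float,
                   monthly_savings: float,
                   monthly_running_cost: float = 0.0) -> int:
    """Whole months needed for net savings to cover the upfront investment."""
    net_monthly = monthly_savings - monthly_running_cost
    if net_monthly <= 0:
        raise ValueError("net monthly savings must be positive to pay back")
    return math.ceil(upfront_cost / net_monthly)


# Hypothetical: 60,000 upfront (licenses, training, integration),
# 12,000 saved per month, 2,000 per month in ongoing tool costs.
print(payback_months(60_000, 12_000, 2_000))
```

If the payback period is longer than the expected lifetime of the tool or the product, the investment is hard to justify regardless of how impressive the individual efficiency gains look.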

Main ROI levers in AI-powered functional and regression testing

Adding AI just for the sake of ‘AI in testing’ rarely brings real value. The biggest ROI gains come from addressing the pain points that cost teams the most time, money, and release velocity. Here are the aspects of functional and regression testing where AI can deliver tangible returns:

1. Test case generation

Based on the analysis of project requirements, historical defects, and user behavior patterns, AI can automatically generate test cases. This approach lets teams cover both common scenarios and edge cases with less manual effort. By continuously adding test sets as the application evolves, AI helps maintain thorough coverage.

For example, with our AI-augmented testing services, generating test cases for one feature takes just 1–3 hours instead of 16–24 hours manually.

ROI impact: Reduced manual effort, increased coverage, and prevention of missed scenarios that could cause post-release defects.

2. Test case prioritization

One of the best applications of AI in software test automation is automated test case prioritization. By examining defect history, code changes, and usage patterns, AI identifies which tests to run to cover high-risk software areas first. Automated prioritization ensures early detection of critical failures and eliminates resource wastage on low-risk tests.

For example, users of Launchable, an ML-powered tool that selects and prioritizes the tests with the highest potential impact, reported a 50% reduction in machine hours, a 90% reduction in test execution times, and a 40% reduction in build times.

ROI impact: Shortened time-to-first-failure, higher testing speed, and reduced wasted compute cycles.

3. Identification of gaps in test coverage

By analyzing code complexity, change frequency, and past defect patterns, AI can highlight gaps in test coverage and point out software modules that require more attention. This lets teams focus on the areas that historically produce the most bugs while avoiding unnecessary testing in low-risk areas.

An IP Development Director at Renesas reported up to a 10x improvement in reducing functional coverage holes by using AI-driven verification with Synopsys VCS.

ROI impact: Better testing of high-risk areas, which reduces escaped defects, and the elimination of low-risk module over-testing, which saves time and computing resources.
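A rough sketch of gap detection: combine a per-module risk estimate with its current test coverage and surface modules that are risky but thinly tested. The risk values are assumed to be precomputed from complexity, change frequency, and defect history; the function name, thresholds, and data are hypothetical.

```python
def coverage_gaps(modules: dict[str, tuple[float, float]],
                  min_risk: float = 0.5,
                  max_coverage: float = 0.6) -> list[str]:
    """Return risky, under-covered modules, largest gap first.

    `modules` maps a module name to (risk, coverage), both in the range 0..1.
    """
    gaps = [
        (name, risk * (1 - coverage))
        for name, (risk, coverage) in modules.items()
        if risk >= min_risk and coverage <= max_coverage
    ]
    return [name for name, _ in sorted(gaps, key=lambda gap: gap[1], reverse=True)]


modules = {
    "payments": (0.9, 0.4),   # high risk, weak coverage
    "search": (0.6, 0.5),
    "settings": (0.2, 0.3),   # low risk: not worth extra tests
    "auth": (0.8, 0.9),       # high risk but already well covered
}
print(coverage_gaps(modules))
```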

4. Test script maintenance

For a long time, test script maintenance has been one of the most time- and effort-consuming tasks. AI-powered, self-healing testing frameworks (e.g., Healenium for Selenium, Katalon, Applitools, Functionize) change this. AI detects changes in the UI or DOM and automatically updates the corresponding locators, waits, or element references. This is an efficient way to keep tests in step with changes in UIs, APIs, or workflows and to retire those that are obsolete.

For example, deployment of a self-healing test automation framework for an e-commerce application reduced the time spent on test maintenance by 70%, allowing the team to focus on more critical tasks.

ROI impact: Reduced maintenance effort and stable, reliable regression suites, which prevents pipeline delays.
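The core of self-healing can be illustrated with a fallback chain of locators: when the primary locator stops matching, the next candidate is tried and the script is told which one worked. This is a deliberately simplified sketch over a list of dictionaries, not the algorithm used by Healenium or any other named tool; all names here are hypothetical.

```python
from typing import Optional

Element = dict[str, str]
Locator = tuple[str, str]  # (attribute name, expected value)


def find(dom: list[Element], attr: str, value: str) -> Optional[Element]:
    """Return the first element whose attribute equals the value, if any."""
    return next((el for el in dom if el.get(attr) == value), None)


def find_with_healing(dom: list[Element],
                      locators: list[Locator]) -> tuple[Element, Locator, bool]:
    """Try locators in order; report the one that matched and whether healing occurred."""
    for index, (attr, value) in enumerate(locators):
        element = find(dom, attr, value)
        if element is not None:
            return element, (attr, value), index > 0
    raise LookupError("no locator matched; the test needs manual attention")


# The button's id was renamed in a UI refactor, but its data-test hook survived.
dom = [{"id": "checkout-submit", "data-test": "submit", "text": "Place order"}]
locators = [("id", "submit-btn"), ("data-test", "submit"), ("text", "Place order")]

element, used, healed = find_with_healing(dom, locators)
print(used, healed)
```

Real frameworks score candidate elements by similarity rather than walking a fixed list, but the payoff is the same: the run continues, and the healed locator can be written back to the script instead of someone debugging a broken selector by hand.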

5. Flakiness detection and reduction

Test flakiness is a common problem in QA regression testing, and AI can handle it efficiently. It spots intermittent failures by analyzing patterns in test executions and then auto-reruns or quarantines unstable tests. This highlights the problematic areas that require further investigation.

A B2B SaaS platform that migrated 1,500 Selenium tests to Checksum, an AI-powered platform, managed to drop the flaky test rate from 22% to 0.6%.

ROI impact: Stable CI pipelines, reduced false positives, and faster investigation.
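A basic flakiness signal needs no machine learning at all: a test that both passes and fails on the same commit is behaving non-deterministically. The sketch below flags such tests from run history; the function name and the tuple layout of `runs` are assumptions made for illustration.

```python
from collections import defaultdict


def flaky_tests(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Flag tests that both passed and failed on the same commit.

    Each run is (test name, commit id, passed).
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}


runs = [
    ("test_login", "abc", True),
    ("test_login", "abc", False),   # same commit, different outcome: flaky
    ("test_cart", "abc", False),
    ("test_cart", "abc", False),    # fails consistently: likely a real defect
    ("test_search", "abc", True),
    ("test_search", "def", False),  # changed outcome on a new commit: possible regression
]
print(flaky_tests(runs))
```

AI-based tools go further by looking at timing, environment, and failure-message patterns, but separating "inconsistent on identical code" from "consistently failing" is the distinction that decides whether a test gets quarantined or triaged as a defect.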

6. Root cause analysis

When failures occur, traditional debugging requires testers and developers to manually check logs, trace code changes, and reproduce issues. This approach is usually slow and labor-intensive. AI-powered analysis tools automatically correlate failing test cases with recent code commits, configuration changes, or environmental factors. This way, defect sources are identified much faster.

For instance, a Fortune 500 data management company that adopted Testim’s RCA feature reduced time spent on troubleshooting by 80%.

ROI impact: Shortened investigative overhead and faster defect resolution time.
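The correlation step can be approximated by checking which recent commits touched files that the failing tests exercise. This is a minimal sketch, not Testim's RCA feature; the coverage map, commit ids, and function name are hypothetical.

```python
def suspect_commits(failing_tests: list[str],
                    coverage: dict[str, set[str]],
                    commits: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Rank commits by how many failing tests cover a file the commit changed."""
    scores: dict[str, int] = {}
    for sha, changed_files in commits.items():
        hits = sum(
            1 for test in failing_tests
            if coverage.get(test, set()) & changed_files
        )
        if hits:
            scores[sha] = hits
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


coverage = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_refund": {"payment.py"},
    "test_search": {"search.py"},
}
commits = {
    "a1b2c3": {"payment.py"},
    "d4e5f6": {"search.py", "README.md"},
    "0789ab": {"docs.md"},
}
print(suspect_commits(["test_checkout", "test_refund"], coverage, commits))
```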

The takeaway: AI maximizes ROI when applied where the waste is greatest, so these are the testing aspects to target first.

Measuring ROI from AI-powered functional and regression testing services

Rolling out AI-powered regression or functional testing without measuring its impact is like installing solar panels and never checking your energy bill. If you want to prove value and make early course corrections, you need to track ROI metrics from implementation through post-release.

Based on the stage where a metric is collected, three main groups of metrics can be distinguished:

- Pre-release metrics show whether your QA team is getting faster and whether busywork is shrinking.
- Post-release metrics show how AI QA impacts quality, customer experience, and operational savings after the product is live.
- Cumulative metrics are aggregated across releases to show the overall impact.

By tracking all these metrics, you can avoid the common pitfall of focusing only on speed or cost. High ROI is gained when speed, quality, and cost efficiency improve together, and AI makes that possible if you track the right metrics at each stage.

The table below presents the key ROI metrics within each group that keep both technical and business stakeholders aligned.

| Metric | What it measures | Why it matters |
| --- | --- | --- |
| Test cycle time reduction | Percentage of decrease in full regression/functional test execution time | Shows early efficiency gains and helps justify AI investment to stakeholders |
| Automation coverage improvement | Percentage of test cases automated in high-risk areas | Indicates scalability of testing without proportional labor cost increase |
| Maintenance effort reduction | Hours spent fixing broken test scripts per sprint/release | Reflects cost savings and capacity gains from self-healing automation |
| Defect leakage into production | Percentage of defects found in production vs. total found | Indicates real-world product quality and customer impact |
| Defect detection efficiency (DDE) | Percentage of total defects caught during testing | Shows the ability of AI QA to intercept costly defects before release |
| Escaped defect severity mix | Severity levels of production defects | Prioritizes prevention of high-impact, high-cost failures |
| Cost of Quality (CoQ) | Total cost of QA and cost of production defects | Measures the financial efficiency of QA over time |
| Release velocity impact | Change in release frequency after AI adoption | Demonstrates the contribution of AI to market responsiveness |
| Total QA labor hours saved | QA hours saved through AI efficiency gains | Converts efficiency into tangible labor cost savings |
| User experience sentiment analysis | CSAT (Customer Satisfaction) or NPS (Net Promoter Score) changes after the implementation of AI-driven QA | Shows whether AI-driven QA improvements translate into better user experience |
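Several of these metrics reduce to simple ratios, so they are easy to compute from data most teams already have. The sketch below follows the definitions given above; the function names and sample figures are hypothetical.

```python
def percent(part: float, whole: float) -> float:
    """Share of `part` in `whole`, as a percentage."""
    if whole == 0:
        raise ValueError("whole must be non-zero")
    return 100 * part / whole


def defect_leakage(found_in_testing: int, found_in_production: int) -> float:
    """Percentage of all known defects that were found in production."""
    return percent(found_in_production, found_in_testing + found_in_production)


def defect_detection_efficiency(found_in_testing: int, found_in_production: int) -> float:
    """Percentage of all known defects that were caught during testing."""
    return percent(found_in_testing, found_in_testing + found_in_production)


def cycle_time_reduction(before_hours: float, after_hours: float) -> float:
    """Percentage decrease in test execution time against the baseline."""
    return percent(before_hours - after_hours, before_hours)


def cost_of_quality(qa_cost: float, production_defect_cost: float) -> float:
    """Total spend on QA plus the cost of defects that reached production."""
    return qa_cost + production_defect_cost


# Hypothetical release: 92 defects caught in testing, 8 found in production,
# full regression run cut from 40 hours to 18.
print(defect_leakage(92, 8), defect_detection_efficiency(92, 8))
print(cycle_time_reduction(40, 18))
print(cost_of_quality(150_000, 35_000))
```

Leakage and DDE are two views of the same split, so they always add up to 100%. Tracking both is mostly a matter of which framing the audience prefers.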

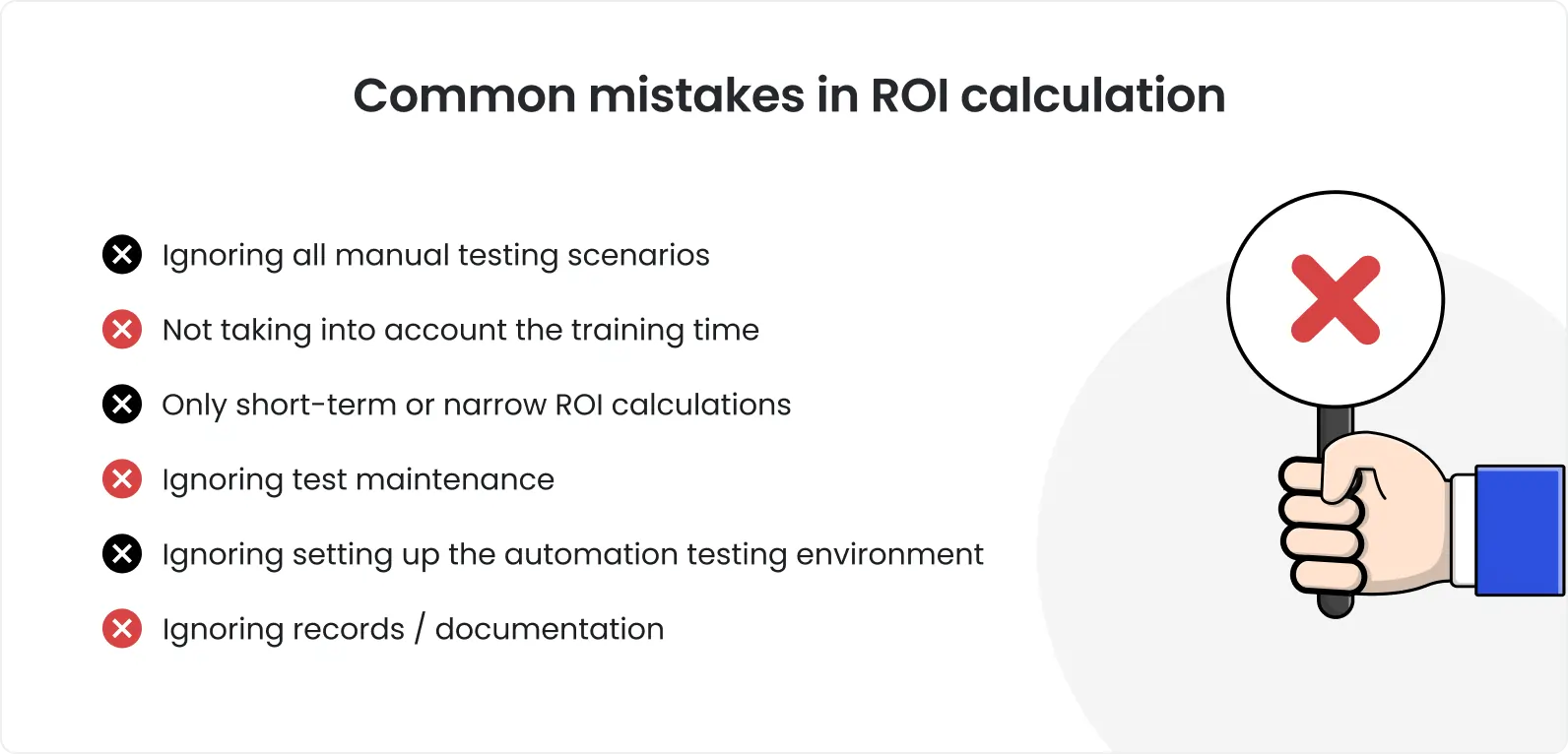

Common pitfalls that undermine ROI from AI automation

Some QA teams approach AI like a plug-and-play tool, expecting immediate value without adapting their workflows or mindset. As a result, they end up with bloated costs, missed targets, and leadership questioning the investment. AI-powered functional and regression testing can drive high ROI, but only if it’s implemented with the right strategy.

That means knowing the common pitfalls and avoiding them before they burn through your budget and credibility.

Here are the main ROI killers.

Over-automating low-value tests

AI makes it easy to automate more tests faster, but not every test needs to be automated. Automating tests that rarely fail, cover stable code, or don’t impact critical workflows is a waste of compute and maintenance effort.

Why it hurts ROI:

- Increased execution time for little quality gain
- Higher cloud compute costs

Better approach: Focus AI-powered automation on high-risk, frequently changed, or revenue-critical paths first.

Focusing on UI automation only

Many AI testing initiatives start (and end) with UI because it’s visual, demo-friendly, and easy to show progress. However, limiting automation to the UI layer and neglecting faster, more stable API and service-level testing is inefficient.

Why it hurts ROI:

- Higher script breakage rates result in more maintenance hours
- Slower test cycles block CI/CD

Better approach: Balance AI-assisted automation across UI, API, and integration layers for faster, more resilient testing.

Using AI tools without adapting workflows

AI brings automated test generation, prioritization, and maintenance. Yet those gains may never reach production if you haven’t adjusted your sprint planning, CI/CD pipeline, review cycles, or triage routines.

Why it hurts ROI:

- Stale workflows block potential speed improvements, making AI initiatives ineffective.

Better approach: Align workflows to leverage AI output to the full extent. For example, feed AI-prioritized results directly into PR reviews or merge blocking rules.

Poor data quality

AI models learn from historical test data, defect logs, and code-to-test mapping. Feed them incomplete or irrelevant data, and they will produce equally flawed recommendations.

Why it hurts ROI:

- Incorrect AI predictions or recommendations lead to missed defects and wasted runs.

Better approach: Implement strong test data management with consistent tagging, clean histories, and updated mappings between code changes and test cases.

Lack of metrics or traceability

Without clear benchmarks and ongoing tracking, there’s no way to prove ROI. AI might be helping, but its contributions become invisible to decision-makers.

Why it hurts ROI: Without evidence, leaders lose confidence, which threatens funding.

Better approach: Before rollout, set ROI-aligned KPIs, such as defect leakage, cycle time, and maintenance effort, and review them every sprint or release.

Roadmap to an ROI-driven QA transformation with AI

High ROI from AI-powered functional and regression testing is achievable, but it doesn’t happen by accident. It takes a well-structured approach where each step is tied to financial outcomes and operational improvements. Here is the roadmap we offer:

1. Audit your current QA ROI

Introducing AI requires a prep phase, and it starts with a clear baseline.

- Measure current regression cycle time, automation coverage, defect escape rate, defect severity mix, and total cost of quality (CoQ).
- Spot hidden drains, such as overlapping manual efforts, unoptimized test environments, and redundant regression runs that offer little risk coverage.

Outcome: This snapshot will help you measure later gains and justify the AI investment to stakeholders.

2. Identify areas where AI can bring you maximum value

As noted above, AI delivers maximum ROI when applied where waste is highest or speed is most critical. Target the most time- and effort-consuming tasks and the areas where precision matters most.

Your list of areas may include:

- Test generation
- Test maintenance
- Test prioritization
- Test data generation
- Root cause analysis
- Flaky test detection

Outcome: This step ensures you’re investing AI effort where it has the greatest impact.

3. Choose the right QA partner

Rolling out AI without expert guidance risks months of rework and sunk costs.

Partner with a trustworthy provider of AI-driven regression and functional testing services. To find one, look for the following:

- Proven case studies in AI-enabled QA
- Fine-tuned infrastructure
- Ability to integrate with your CI/CD pipelines and existing toolset
- Experience in aligning QA metrics with business KPIs

Outcome: An experienced managed testing partner prevents costly missteps and shortens time to value.

4. Set measurable business-aligned goals

Set financial and operational goals before rollout.

- Set ROI-linked KPIs, for example, a 50% reduction in regression cycle time within 6 months or a 30% decrease in escaped defects this quarter.
- Align with delivery priorities. Set goals related to faster releases, improved customer experience, and reduced production incidents.
- Secure leadership buy-in, as a shared vision ensures the QA transformation is supported across departments.

Outcome: Everyone knows what they’re working toward, progress is easier to track, and teams can rally around shared wins that boost both product quality and business results.
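Goals phrased as "X% reduction against a baseline" are easy to check mechanically at every review. The sketch below is one possible way to encode such targets; `KpiTarget` and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiTarget:
    name: str
    baseline: float              # value measured before AI rollout
    target_reduction_pct: float  # agreed goal, e.g. 50 for "50% lower"

    def achieved_reduction_pct(self, current: float) -> float:
        """Reduction achieved so far, as a percentage of the baseline."""
        return 100 * (self.baseline - current) / self.baseline

    def is_met(self, current: float) -> bool:
        return self.achieved_reduction_pct(current) >= self.target_reduction_pct


# Hypothetical targets mirroring the examples above.
cycle_time = KpiTarget("regression_cycle_hours", baseline=40, target_reduction_pct=50)
escaped = KpiTarget("escaped_defects_per_quarter", baseline=20, target_reduction_pct=30)

print(cycle_time.is_met(current=18))  # 55% reduction against a 50% goal
print(escaped.is_met(current=15))     # 25% reduction against a 30% goal
```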

5. Start small

A big-bang approach increases risk and dilutes learning. Instead, take the following steps:

- Pilot first in one product line or testing type.
- Gather feedback by tracking efficiency gains, defect detection rates, and tester adoption.
- Scale only when the model proves value.

Outcome: You iron out the kinks on a small scale, get quick wins, and know exactly how to roll it out wider without the guesswork.

6. Track and optimize continuously

The first gains are just the start. AI models, prioritization rules, and self-healing logic all need fine-tuning as your product evolves.

- Use service-level dashboards to track technical (DDE, leakage, cycle time) and business metrics (cost savings, release velocity).
- Review ROI progress every sprint or release, and make adjustments to focus areas as needed.
- Evolve with business needs by recalibrating where AI adds the most value as your products change.

Outcome: A continuously improved and tuned AI-driven QA process doesn’t plateau but keeps delivering tangible returns.

Summary: ROI in QA isn’t optional anymore

Software testing and AI are a good combo with huge potential to drive high ROI. However, ROI isn’t just about trimming the budget. It’s also about getting your product into users’ hands faster and with fewer hiccups. AI-powered QA makes that possible, provided experienced specialists handle its implementation and maintenance.

At DeviQA, we have both the experts and the facilities to help you introduce AI-driven automation testing with maximum value.

Book a consultation with our team to assess your current ROI challenges and explore smarter testing options.