Have you ever been caught out by a system outage? Travellers at major UK airports certainly were in May 2024, when the nationwide e-gate system went down, causing hours-long queues.

And Taylor Swift fans definitely remember November 2022, when Ticketmaster’s platform buckled under overwhelming demand for The Eras Tour tickets. Even well-designed automated systems can fail under real-world operational stress.

Such failures happen for a number of reasons: software systems have become more distributed, user demand more volatile, and releases more frequent.

Under these conditions, traditional load and stress testing is no longer enough on its own. Enterprises that want to be confident in the stability of their software products are shifting to an AI-based approach to performance testing that lets them predict issues and act before real users are affected.

With the global AI-enabled testing market projected to grow from USD 1,010.9 million in 2025 to USD 3,824.0 million by 2032, a CAGR of 20.9%, it’s clear that AI has moved from theoretical promise to a practical tool that makes a real difference.

If you want your software to be synonymous with reliability, it’s time to embed AI into your performance testing strategy.

The growing importance of predictive performance testing

Load and stress testing is executed to understand how software behaves under pressure. It spots bottlenecks, defines capacity limits, and finds points of failure before they affect the experience of real users. Traditionally, this involved running tests that simulate high traffic or heavy workloads and then analyzing the results to detect issues.

Predictive performance testing introduces a new philosophy. Instead of detecting problems during or after a test run, it aims to anticipate potential failures before they occur. AI is what makes this possible.

By analyzing large volumes of telemetry, historical data, and system interactions, AI models can recognize even small anomalies and pinpoint likely failure points faster and more accurately than manual analysis allows. Predictive performance testing is well on its way to becoming a standard part of performance QA.

Benefits of software performance testing with AI:

Better system stability: Using this preventative approach, QA teams intervene earlier, reducing downtime and improving overall system reliability.

Resource optimization: Predictive models can help optimize resource allocation, ensuring efficient use of infrastructure and minimizing costs.

Improved decision-making: By providing insights into potential performance issues, predictive performance testing enables teams to make informed decisions about resource allocation, testing priorities, and overall system design.

Meeting heightened user expectations: Users expect fast loading times, responsive interfaces, and uninterrupted service, especially during peak hours or critical transactions, and predictive performance testing helps to prevent possible issues so that your users stay happy.

With this in mind, predictive performance testing should be treated not just as a nice-to-have practice but as a necessity for companies striving to deliver high-quality digital experiences.

Why traditional software performance testing fails to meet modern requirements

Traditional load and stress testing methods have been used for years. Today, however, they cannot ensure the desired stability because they lack the ability to predict and fix performance issues in real time. As a result, outages still happen regularly. Uptime Institute survey data suggests that each year there are, on average, 10 to 20 high-profile IT outages that cause severe financial loss, business and customer disruption, and reputational damage.

While businesses deal with the consequences, QA teams, even mature ones, struggle with the blind spots of traditional performance testing. Here are the most common pain points of the conventional approach:

Hardcoded test scripts

Test scripts are usually written with fixed parameters. Set user flows, defined input values, and predictable sequences are the norm. Because of this rigidity, it’s quite difficult to simulate the diverse, dynamic, and unpredictable patterns of user behavior seen in real life.

Fragile test scenarios

Most of you know how easily test scenarios can break when application logic, APIs, or UI elements change. Therefore, QA teams spend significant time fixing and maintaining scripts rather than running new tests. This, in turn, slows down release cycles and increases the risk of untested code in production. According to Mabl’s survey report, QA engineers rank test maintenance as the second most time-consuming activity after test planning and case management.

Static test data

Using a fixed set of test data is usual practice in traditional software performance testing. This static data quickly becomes outdated or unrealistic and fails to reflect live conditions, such as seasonal behavior shifts, edge cases, or rare but impactful input combinations, reducing the accuracy of testing results.

Manual root cause analysis

In a traditional environment, when performance tests spot an issue, identifying its root cause is a manual task that requires senior-level expertise. Needless to say, this can be slow and error-prone, particularly in complex systems with multiple dependencies, which increases downtime costs and delays resolution.

Long feedback loops

As traditional load and stress testing cannot predict possible issues, performance bottlenecks are spotted only after lengthy test runs. Add time for analysis of test results and root causes, and reaction times get extremely slow, allowing performance regressions to linger longer and sometimes slip into production.

Limited scalability for complex, distributed systems

Traditional performance testing tools often have trouble simulating interactions in microservices architectures, cloud-native applications, and systems with numerous third-party integrations. Reproducing realistic communication patterns between hundreds of distributed components is indeed challenging, which is why there are often blind spots in coverage. For enterprise performance testing, this inability to scale effectively is one of the biggest problems.

Lack of real-time monitoring and adaptive testing

Conventional load tests aren’t connected to live system monitoring and cannot adapt to changing system behavior mid-test. As a result, tests can miss transient issues that occur only under specific conditions, reducing the ability to predict failures before they happen.

How AI-enabled performance testing predicts failures

AI adoption is the next stage in the evolution of performance testing. Its capabilities, particularly in predictive analysis, make testing more dynamic and proactive. Here is what you can expect from AI-enabled software performance testing:

Behavioral load modeling

While the traditional approach relies on assumed traffic patterns and test scenarios, AI-enabled performance testing tools model actual user behavior from production telemetry such as click paths, session duration, geographic load distribution, and device types. Moreover, the load models update as usage changes, so test scenarios mirror reality and don’t become obsolete over time.

Example setup: Apache JMeter with SmartMeter.io AI plugins
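To make the idea concrete, here is a minimal Python sketch of one simple way to build a behavioral load model: a first-order Markov chain estimated from click paths. It assumes production telemetry has already been reduced to one ordered list of page names per session. The page names, `build_transition_model`, and `sample_session` are hypothetical and do not reflect how SmartMeter.io or any other tool works internally.

```python
import random
from collections import Counter, defaultdict

# Hypothetical click paths reconstructed from production telemetry, one list per session.
SESSIONS = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "product", "exit"],
    ["home", "search", "product", "exit"],
    ["home", "search", "search", "product", "cart", "exit"],
]


def build_transition_model(sessions):
    """Estimate P(next page | current page) from observed click paths."""
    counts = defaultdict(Counter)
    for path in sessions:
        steps = ["<start>"] + path
        for current, nxt in zip(steps, steps[1:]):
            counts[current][nxt] += 1
    return {
        page: {nxt: n / sum(nexts.values()) for nxt, n in nexts.items()}
        for page, nexts in counts.items()
    }


def sample_session(model, rng, max_steps=20):
    """Generate one synthetic user journey by walking the Markov chain."""
    page, path = "<start>", []
    for _ in range(max_steps):
        options = model.get(page)
        if not options:  # no outgoing transitions observed from this page: session ends
            break
        page = rng.choices(list(options), weights=list(options.values()))[0]
        path.append(page)
    return path


model = build_transition_model(SESSIONS)
journey = sample_session(model, random.Random(7))  # seeded for a reproducible load profile
```

Rebuilding the model from a fresh telemetry window before each test run is what keeps the generated journeys in step with real usage. A realistic setup would also model think times, session arrival rates, and the device or region mix.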

Real-time anomaly detection

Forget about combing through test logs after an outage. AI scans logs, metrics, and APM traces in the course of testing, identifying abnormal patterns. Whether it’s unusual CPU spikes, slow API calls, or sudden error bursts, the issues get flagged right away. As a result, QA teams receive earlier warnings and take advantage of the improved signal-to-noise ratio of alerts.

For example, the implementation of AI-powered anomaly detection enabled Payconiq, a fintech startup, to understand how and where applications are acting differently than normal. This led to better payment processing reliability and performance for both consumers and businesses.

Example setup: Elastic’s anomaly detection jobs or Prometheus in combination with Anodot.
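A rolling z-score is one of the simplest techniques behind streaming anomaly detection. The sketch below assumes one metric sample (for example, p95 latency per second) arrives at a time while the test runs. `RollingZScoreDetector` and its parameters are illustrative and are not the algorithm used by Elastic, Anodot, or any other product.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Streaming detector: flags a sample that sits more than `threshold` standard
    deviations away from the mean of the last `window` normal samples."""

    def __init__(self, window=30, threshold=3.0, min_samples=10, min_std=1e-6):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples  # don't judge until a baseline exists
        self.min_std = min_std          # floor so a perfectly flat baseline still works

    def update(self, value):
        """Score one new sample and return True if it looks anomalous."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            samples = list(self.history)
            mu = mean(samples)
            sigma = max(stdev(samples), self.min_std)
            is_anomaly = abs(value - mu) / sigma > self.threshold
        if not is_anomaly:
            # Only normal samples update the baseline, so a spike doesn't inflate sigma.
            self.history.append(value)
        return is_anomaly
```

Keeping flagged samples out of the baseline stops a single spike from inflating the standard deviation, but it also means a lasting level shift keeps being flagged until the baseline is reset. Production tools handle seasonality and level shifts far more carefully.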

System degradation forecast

Not all failures happen suddenly; most creep up gradually. AI can correlate infrastructure metrics (CPU load, memory usage, disk I/O) with application logs to predict when a system will hit its limits. For example, it can spot a slow memory leak in the middle of a load test and estimate when the leak is likely to cause an outage.

Example setup: Facebook Prophet for time-series forecasting or KServe for deploying predictive models into Kubernetes.
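As a deliberately simplified stand-in for a forecasting model like Prophet, the sketch below fits a straight-line trend to memory samples collected during a load test and projects when usage will reach a limit. It assumes the leak grows roughly linearly; the function name and sample figures are hypothetical. Prophet and similar tools add seasonality and uncertainty intervals that this sketch ignores.

```python
def forecast_time_to_limit(timestamps, usage, limit):
    """Fit usage = intercept + slope * t by ordinary least squares and return the
    timestamp at which the fitted line reaches `limit`.

    Returns None when usage is flat or decreasing (no projected exhaustion).
    """
    n = len(timestamps)
    if n < 2 or n != len(usage):
        raise ValueError("need at least two (timestamp, usage) pairs")
    t_mean = sum(timestamps) / n
    u_mean = sum(usage) / n
    cov = sum((t - t_mean) * (u - u_mean) for t, u in zip(timestamps, usage))
    var = sum((t - t_mean) ** 2 for t in timestamps)
    if var == 0:
        raise ValueError("timestamps must not all be equal")
    slope = cov / var
    if slope <= 0:
        return None
    intercept = u_mean - slope * t_mean
    return (limit - intercept) / slope
```

For instance, memory growing by 10 MB per minute from 1,000 MB would reach a 2,048 MB limit about 6,288 seconds (roughly 105 minutes) after the first sample.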

Automated root cause analysis

When performance tests detect an issue, finding its cause is often the slowest step. AI can assist by grouping related logs, stack traces, and performance metrics into incident clusters that point to likely causes. This drastically reduces manual effort as well as speeds up issue resolution.

Example setup: Splunk’s Machine Learning Toolkit or Datadog Watchdog.
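The sketch below illustrates the grouping idea on logs alone: variable parts of each line (durations, numbers, UUIDs) are masked so similar messages collapse into one template, and templates are ranked by how much more often they appear during the incident window than in a healthy baseline. It is a simple frequency-ratio heuristic chosen for illustration, not the method used by Splunk’s Machine Learning Toolkit or Datadog Watchdog, and the function names are hypothetical.

```python
import re
from collections import Counter

# Masks applied in order: UUIDs first, then numbers with an optional ms/s unit.
_VARIABLE_TOKENS = [
    (re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b"), "<UUID>"),
    (re.compile(r"\b\d+(\.\d+)?(ms|s)?\b"), "<NUM>"),
]


def to_template(line):
    """Replace variable tokens so messages that differ only in values group together."""
    for pattern, placeholder in _VARIABLE_TOKENS:
        line = pattern.sub(placeholder, line)
    return line


def rank_suspect_templates(baseline_logs, incident_logs, top_n=3):
    """Rank log templates by how much more frequent they are during the incident."""
    base = Counter(to_template(line) for line in baseline_logs)
    inc = Counter(to_template(line) for line in incident_logs)
    base_total = max(len(baseline_logs), 1)
    inc_total = max(len(incident_logs), 1)
    scores = {}
    for template, count in inc.items():
        inc_rate = count / inc_total
        base_rate = (base[template] + 1) / (base_total + 1)  # add-one smoothing for unseen templates
        scores[template] = inc_rate / base_rate
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_n]
```

Given a healthy baseline and an incident window in which lines like `db pool exhausted, waited 5000ms` suddenly appear, this ranks the `db pool exhausted, waited <NUM>` template first, pointing engineers toward the connection pool rather than the slow endpoints that are merely symptoms.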

Adaptive tuning of load profiles

Unlike the traditional approach that sticks to a fixed load pattern, AI-based performance testing spices things up. By leveraging reinforcement learning or optimization models, it adjusts strategies mid-test, ramping virtual user counts, changing pacing, and redistributing load on the fly. This allows QA teams to push system limits and recreate edge cases, pivoting at early signs of instability.

Moreover, research on using a reinforcement learning–driven test agent for performance testing shows it can reduce test costs by up to 25% compared to baseline approaches and by 13% compared to random approaches.

Example setup: Azure App Testing or custom AI controllers built into Artillery.io.
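Reinforcement learning and optimization models are beyond a short example, so the sketch below uses a much simpler feedback controller to show the core idea of adjusting load mid-test. It ramps virtual users while the service meets its SLO and, at the first sign of instability, halves the step size and probes just above the last healthy level. `run_step` is a hypothetical hook into whatever load tool drives the traffic, and the thresholds are placeholders.

```python
from typing import Callable, Tuple


def find_capacity(
    run_step: Callable[[int], Tuple[float, float]],
    start_users: int = 50,
    step: int = 50,
    max_users: int = 5000,
    p95_slo_ms: float = 500.0,
    max_error_rate: float = 0.01,
    min_step: int = 5,
) -> int:
    """Adapt the virtual-user count between short load steps to home in on the
    highest load that still meets the SLO.

    `run_step(users)` runs one load step and returns (p95_latency_ms, error_rate).
    """
    users, best = start_users, 0
    while step >= min_step and users <= max_users:
        p95, errors = run_step(users)
        healthy = p95 <= p95_slo_ms and errors <= max_error_rate
        if healthy:
            best = max(best, users)
            users += step        # system copes: keep ramping
        else:
            step //= 2           # instability: shrink the step
            users = best + step  # and probe just above the last healthy level
    return best
```

Against a simulated service whose p95 latency stays at 200 ms up to 1,234 users and then rises by 10 ms per extra user, the controller settles on 1,262 users, close to the true 500 ms limit of 1,264. Real measurements are noisy, so a practical controller would repeat or smooth each step before deciding.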

Benefits of AI-powered performance testing for businesses

The adoption of AI-powered performance testing makes a big difference not only for QA teams and CTOs but also for business stakeholders. Businesses benefit from:

1. Fewer system outages

AI-powered software performance testing entails continuous software monitoring, real-time anomaly detection, and even early issue prediction. Thanks to this proactivity, teams can nip problems in the bud, reducing potential downtime and protecting revenue.

2. Cost savings

Because AI reduces manual effort, optimizes resource allocation (server capacity, bandwidth, cloud usage, etc.), and predicts performance problems, businesses avoid costly downtime and post-release fixes and cut both operational and testing costs.

3. Data-driven decision-making

The AI-based approach provides valuable insights into application performance that stakeholders can use to make informed decisions on capacity planning, infrastructure investments, and the alignment of technical strategies with business objectives.

4. Faster release cycles

Quick analysis of huge data volumes is one of AI’s key strengths, and it helps QA teams deliver feedback on application performance much faster. As a result, iterations become quicker and release cycles shorter, allowing businesses to respond promptly to market demands and stay ahead of the competition.

5. Better customer experience

By ensuring smooth app operation under different loads and minimizing post-release defects, AI-driven performance testing helps deliver an excellent user experience. System reliability fosters customer trust and satisfaction, leading to increased user loyalty and positive brand perception.

6. Alignment with continuous delivery practices

AI-powered performance tests smoothly integrate into continuous delivery pipelines, ensuring thorough evaluation of software performance throughout the development lifecycle. This helps maintain high-quality standards and supports agile development practices.
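In a pipeline, integration usually comes down to a gate that fails the build when performance regresses. The sketch below shows that hook with a plain statistical check (nearest-rank p95 compared against a stored baseline with a 10% tolerance); an AI-based tool would supply its own verdict in place of this comparison. The function names, the percentile, and the tolerance are assumptions for illustration.

```python
import math


def percentile(samples, q):
    """Nearest-rank percentile of `samples` for q in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def performance_gate(baseline_ms, current_ms, q=95, tolerance=0.10):
    """Return (passed, baseline_p, current_p); the gate fails when the current
    percentile is more than `tolerance` above the baseline percentile."""
    base_p = percentile(baseline_ms, q)
    cur_p = percentile(current_ms, q)
    return cur_p <= base_p * (1 + tolerance), base_p, cur_p
```

A CI step would call `performance_gate` on the latest test results and exit with a non-zero code when `passed` is false, which most CI systems treat as a failed stage.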

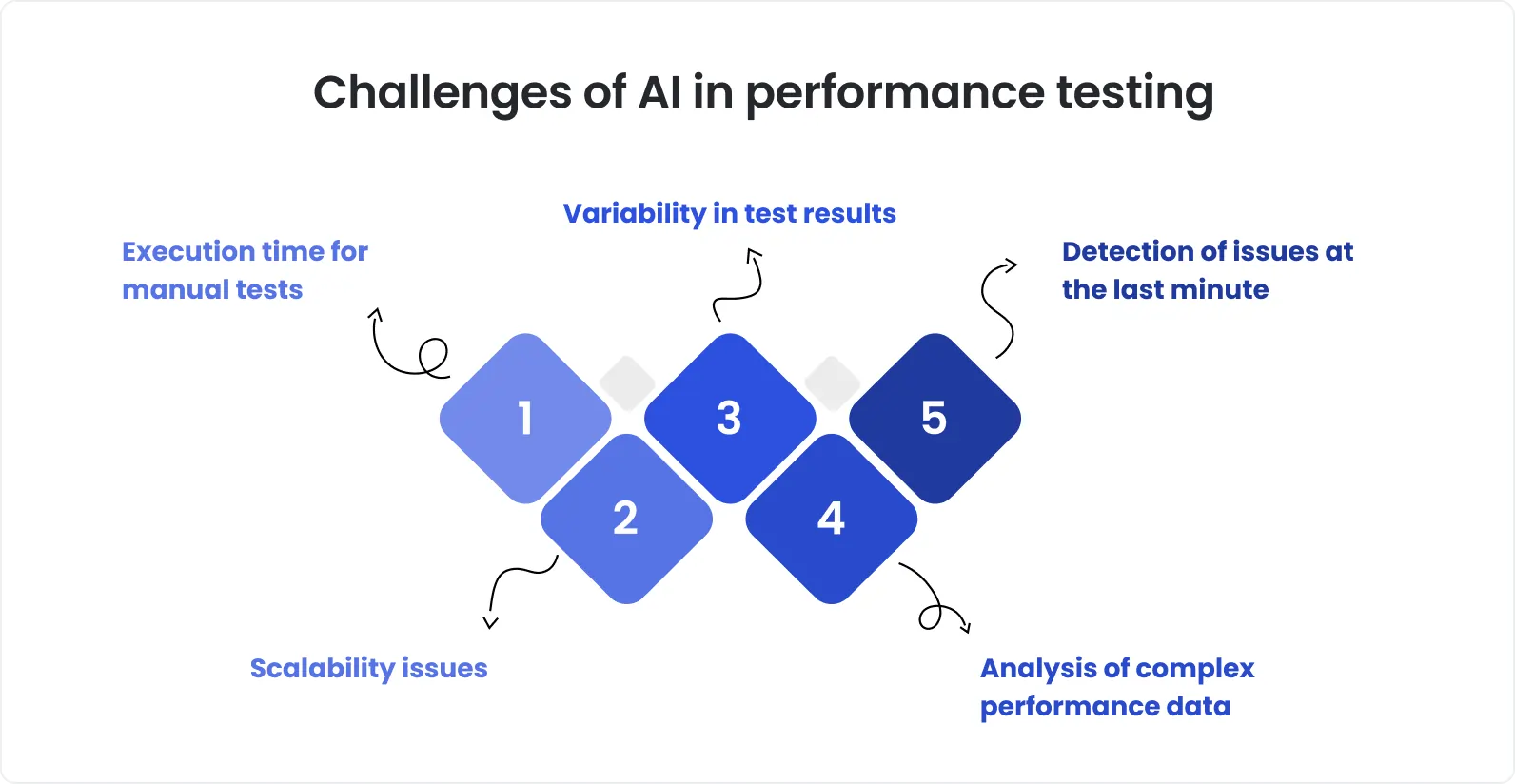

Challenges of using AI for predictive performance testing

AI-powered testing is highly efficient, but it’s important to understand that it isn’t a cure-all. CTOs and QA experts should be aware of its dependencies and trade-offs to set realistic expectations and get maximum value.

1. Constant need for high-quality training data

AI models used in modern performance testing solutions rely heavily on high-quality data. Yet many teams have fragmented telemetry, which leaves gaps in the training set. Incomplete or noisy logs and telemetry reduce the accuracy of predictions, leading to missed issues or false alerts.
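Before training anything, it helps to measure how fragmented the telemetry actually is. This sketch assumes metrics are scraped at a fixed interval (15 seconds in the test) and reports missing stretches plus the share of expected samples that actually exist. The function names and the 1.5x tolerance are illustrative choices, not part of any particular tool.

```python
def find_gaps(timestamps, expected_interval, tolerance=1.5):
    """Return (start, end) pairs where consecutive samples are further apart than
    `tolerance` times the expected scrape interval."""
    ordered = sorted(timestamps)
    return [
        (a, b)
        for a, b in zip(ordered, ordered[1:])
        if b - a > expected_interval * tolerance
    ]


def coverage_ratio(timestamps, expected_interval):
    """Share of the samples expected over the observed time span that actually exist."""
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    span = max(timestamps) - min(timestamps)
    expected = round(span / expected_interval) + 1
    return min(1.0, len(set(timestamps)) / expected)
```

A low coverage ratio or long gaps around peak hours are a signal to fix collection before trusting any model trained on that window.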

2. Limited interpretability of AI models

Some AI models, especially deep learning models, operate as black boxes, producing predictions and recommendations without explanations. This lack of explainability makes it difficult for QA engineers and decision-makers to trust alerts or act on AI recommendations, particularly in regulated industries.

3. Privacy and security concerns

Predictive AI models sometimes need access to production data to learn realistic usage patterns. Without appropriate anonymization or governance, this can raise privacy or compliance risks, especially when sensitive user data is involved. Again, such risks are unacceptable in healthtech, fintech and banking, and other regulated industries.
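One common mitigation is pseudonymizing telemetry before it reaches a model. The sketch below replaces the user ID with a keyed HMAC-SHA256 hash, so sessions can still be linked for usage modeling without exposing the raw ID, and drops fields that directly identify a person. The field names are hypothetical, and keyed hashing is pseudonymization rather than full anonymization, so it does not replace proper governance or a compliance review.

```python
import hashlib
import hmac

# Hypothetical names of fields that directly identify a person and should never reach the model.
SENSITIVE_FIELDS = {"email", "ip_address", "full_name"}


def pseudonymize_event(event, secret_key: bytes):
    """Return a cleaned copy of a telemetry event (the input dict is not modified).

    The user ID is replaced by a truncated keyed hash: the same user always maps to
    the same token under one key, but the raw ID cannot be read back without the key.
    """
    cleaned = {k: v for k, v in event.items() if k not in SENSITIVE_FIELDS}
    if "user_id" in cleaned:
        digest = hmac.new(secret_key, str(cleaned["user_id"]).encode(), hashlib.sha256)
        cleaned["user_id"] = digest.hexdigest()[:16]
    return cleaned
```

The secret key would live in a secrets manager, outside the training environment, so that whoever works with the training data cannot recompute the mapping.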

4. Possible model drift

When they are not regularly retrained to reflect new workloads, software updates, or changed user behavior, AI models degrade over time, reducing the accuracy of performance testing. Ongoing monitoring and regular retraining are essential to keep AI predictions reliable.
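Drift can be monitored by comparing the distribution a model was trained on with what production looks like now. The sketch below computes the population stability index (PSI), one common drift metric; the equal-width binning is a simplifying assumption. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but those cutoffs are conventions rather than standards.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a training-time sample (`expected`) and recent data (`actual`).

    Bins are equal-width over the range of `expected`; values outside that range
    fall into the first or last bin, and `eps` avoids log(0) for empty bins.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("expected sample has zero range")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Running this on a key input feature (for example, request rate per endpoint) on a schedule, and triggering retraining when the index crosses the chosen threshold, turns "frequent updates" into a concrete policy.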

Why outsource AI-enhanced performance testing services?

Building an efficient AI-driven performance testing solution in-house requires substantial investment, plenty of resources, and months of work. But do you really need to start from scratch? Partnering with a professional QA outsourcing provider like DeviQA is a great way to adopt AI-based performance testing without the burden of creating and maintaining complex systems yourself.

You get immediate access to specialized skills, fine-tuned infrastructure, and well-trained AI models. You also avoid the common hassles and pitfalls of implementing AI-based performance testing.

Working with a reputable provider of performance testing services is definitely worth your consideration because of the numerous advantages it brings:

Rich expertise in AI-enhanced performance testing

Access to proven infrastructure and reusable AI models

Insights gained from diverse projects

Faster time to value

Ability to focus on other critical tasks while your QA partner takes full ownership of software performance testing

Prefer to manage everything in-house? Then at least check out our CTO checklist for AI-enhanced performance QA.

Summary: AI-enhanced performance testing – a leap from reactive to predictive QA

AI-enhanced performance testing makes it possible to anticipate issues before they disrupt users. With AI solutions in place, QA teams spot trouble while it’s still brewing.

While not replacing tried-and-true performance testing practices, AI complements them with predictive analytics and intelligent test automation. This makes testing more efficient and brings numerous benefits for businesses.

Now that you know the capabilities of AI in performance testing, you can decide which testing strategy best fits your needs. Is predictive performance testing the right choice for you? Book a QA consultation to learn how the DeviQA team can implement it for your project.