The promise of AI is everywhere. It seems that every company and organization out there is building AI into its processes. In reality, an MIT report indicates that up to 95% of AI pilot projects fail.

At the same time, the AI sector is booming:

The AI industry is expected to increase in value by around 5 times over the next 5 years.

The AI market is growing at a 35.9% compound annual growth rate (CAGR).

As of late 2025, about 97 million people work in the AI space.
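The two growth figures above are broadly consistent with each other. Assuming the 35.9% CAGR holds for five consecutive years, compounding from a starting value $V_0$ gives roughly a 4.6-fold increase, close to the "around 5 times" estimate:

```latex
V_5 = V_0 \, (1 + 0.359)^5 = V_0 \cdot 1.359^5 \approx 4.64 \, V_0
```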

The trend is clear. On the one hand, AI is here to stay. On the other hand, companies talk about AI, yet nobody, or almost nobody, knows how to implement it properly.

The same is true for software testing and QA. One report suggests that 55% of organizations use AI tools for development and testing, yet only a small fraction of QA teams, about 14%, achieve more than 80% test coverage. So software testing has room to grow, and there are already real examples of AI adoption tested, no pun intended, in real-world scenarios.

In this piece, we focus on those cases to show that using AI in software testing and QA is possible. We explain how to use AI in software testing so that it helps rather than becoming a liability, and why a blended approach brings the best results. Our overall hypothesis is that Artificial Intelligence in software testing is neither a magic wand nor a threat to testing experts.

AI in software testing. Why now, and why is it everywhere?

The explosion of AI in software testing did not happen by accident. Dig deeper and three driving forces emerge beneath the surface:

Driving force #1. Rising project complexity mixed with demand for faster releases

Digitally savvy users demand increasingly complex products. This translates into microservices, mobile apps, and cloud APIs, which multiply the surface that needs testing. At the same time, growing competition keeps shrinking release cycles: teams are moving from monthly to weekly and even daily releases.

Traditional software testing struggles to keep up. Survey data shows that 46% of software teams deploy code 50% faster than a year earlier, and 62% of organizations already plan for higher spending on automation.

The hope is that AI can cope with both growing product complexity and shrinking release cycles.

Driving force #2. Mainstream adoption and investment

Organizations that want real results go beyond mere experimentation with AI. According to a Capgemini report, 75% of organizations already use AI to optimize their QA processes, and McKinsey reports that three-quarters of businesses use AI in at least one business function. This surge is a clear sign of pressure to improve quality while cutting costs in both software development and QA.

Growing demand for AI-based optimization brings growing investment. With funding available, a rising number of businesses are looking for ways to take AI beyond pilots. Yet not many succeed.

Driving force #3. Growing proof of value

Real enthusiasm should come not from trends, buzzwords, and FOMO, but from measurable results. Those who managed to take AI projects beyond pilots report higher productivity as the main outcome, with 65% of businesses saying so. In QA and software testing, successful AI projects have cut test execution time by up to 80%. Such gains come from automating repetitive tasks and refocusing human effort on high-value activities.

For QA and engineering leaders, the message is clear: ignoring AI is risky, but adoption must be thoughtful. Hype has bred misconceptions, and not every problem can be solved with algorithms. In most cases, success comes from combining human effort with machine efficiency, which we discuss in detail in the next section.

What AI in software testing can really do. Separating fact from fiction.

AI brings real capabilities to testing, but it is not a panacea. Understanding its strengths and limitations helps you avoid misplaced expectations.

The table below summarizes where software testing using Artificial Intelligence shines and where humans remain essential.

AI works best as a co‑pilot. As CIO Dive reported, more than two‑thirds of IT leaders trust AI‑powered testing tools, but nearly three‑quarters insist on human validation.

Standards bodies agree: the NIST AI Risk Management Framework stresses that AI should augment, not replace, human judgment. When implementing software testing with AI, keep human testers in the loop for interpretation, risk prioritization, and exploratory testing.

3 ways AI helps software testing and QA teams

Many experts fear that AI will take their jobs. However, the key idea is not to replace human talent but to amplify it. Adopting AI for software testing is about freeing testers from manual, repetitive tasks so they can focus on more important work such as strategy and user experience.

With the true value of AI in mind, let's look at three proven ways AI can help software testing and QA teams go beyond manual, repetitive work.

Use case #1. Using smart prioritization to tame test suite bloat

Problem

As thousands of cases pile up over years of development, test suites inevitably balloon. Testers then lose valuable time on obsolete paths and low-risk functions, and the resulting slow feedback wastes resources.

As noted earlier, only 14% of teams achieve over 80% test coverage, and test maintenance often swallows 20% of team time. In the end, this bloat makes it hard to deliver fast, reliable releases.

How AI helps

Analyzing change impact. AI tools inspect code changes, commit histories, and dependency graphs. They predict which areas of the codebase are most at risk and propose the minimal set of tests needed to validate them.

Prioritizing by risk. Models rank test cases based on the likelihood of failure and business impact. Lower‑value tests run less frequently, while high‑risk paths get more attention.

Eliminating redundant tests. AI can detect overlapping coverage and consolidate similar cases. It can also auto‑generate tests for untested paths, helping keep coverage comprehensive without manual additions.
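The three ideas above can be illustrated with a deliberately simple, rule-based sketch. It assumes you already have per-test coverage data (which files each test exercises), a historical failure rate, and a team-assigned business-impact weight; the `SuiteCase` type, the function names, and the "failure rate times impact" score are all hypothetical stand-ins for what an AI tool would learn from commit history and dependency graphs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteCase:
    name: str
    covered_files: frozenset   # source files this test exercises (e.g. from coverage data)
    failure_rate: float        # historical fraction of runs that failed, 0..1
    business_impact: float     # team-assigned weight for the feature under test, 0..1


def select_and_rank(tests, changed_files):
    """Change impact + risk ranking: keep tests that touch a changed file, riskiest first."""
    changed = set(changed_files)
    impacted = [t for t in tests if t.covered_files & changed]
    # Hypothetical risk score: likelihood of failure multiplied by business impact.
    return sorted(impacted, key=lambda t: t.failure_rate * t.business_impact, reverse=True)


def redundant_tests(tests):
    """Names of tests whose coverage is a strict subset of some other test's coverage."""
    redundant = set()
    for a in tests:
        for b in tests:
            if a is not b and a.covered_files < b.covered_files:
                redundant.add(a.name)
                break
    return redundant


suite = [
    SuiteCase("login_smoke", frozenset({"auth.py"}), 0.10, 0.9),
    SuiteCase("login_full", frozenset({"auth.py", "session.py"}), 0.05, 0.9),
    SuiteCase("report_export", frozenset({"reports.py"}), 0.30, 0.4),
]

print([t.name for t in select_and_rank(suite, ["auth.py"])])  # ['login_smoke', 'login_full']
print(redundant_tests(suite))                                 # {'login_smoke'}
```

A change to `auth.py` skips `report_export` entirely. The subset rule only flags consolidation candidates: two tests can cover the same files while checking different behavior, so a tester should confirm before anything is removed.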

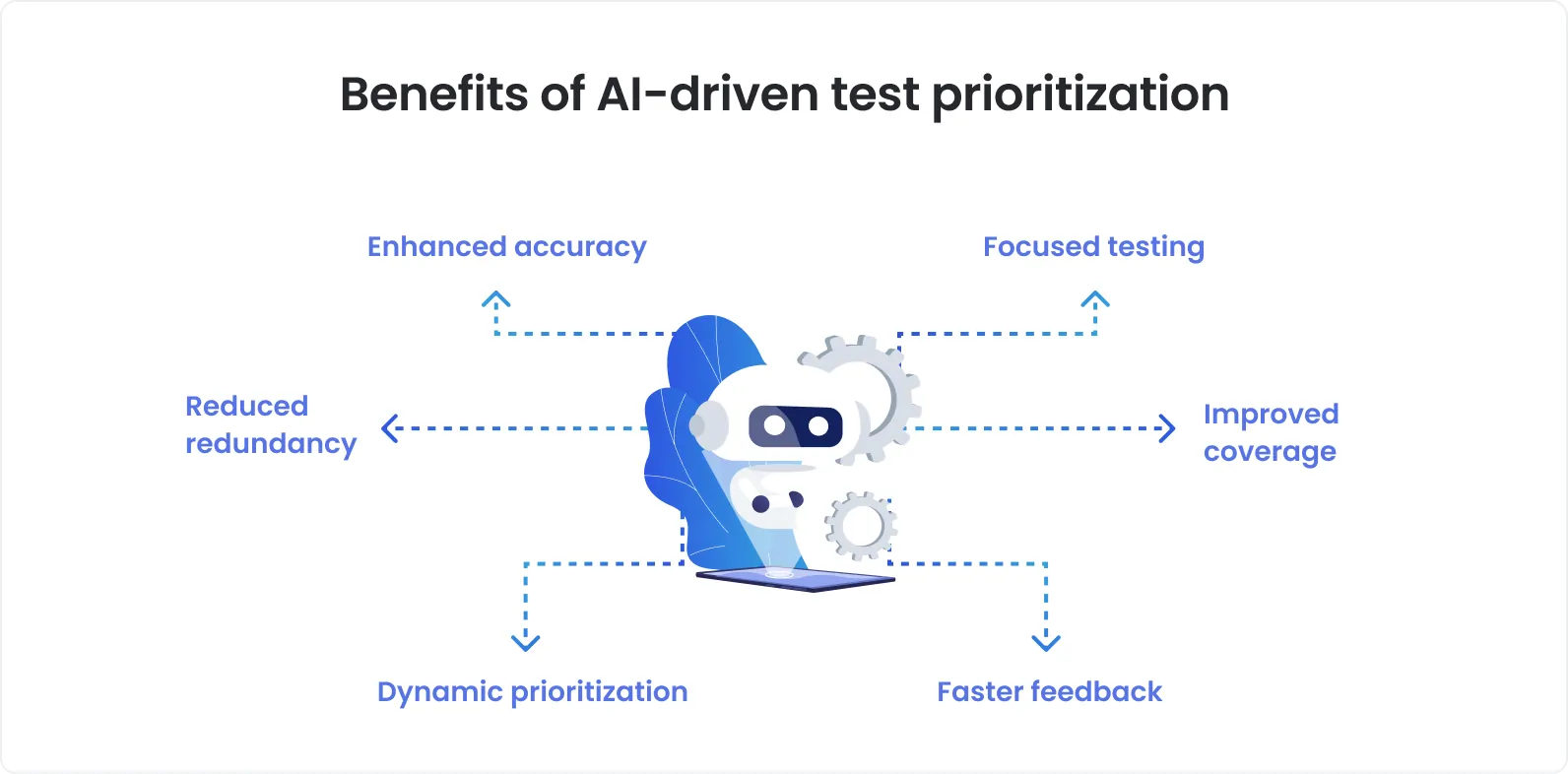

Benefits

Software testing and QA teams can use AI to get measurable efficiency gains. According to our AI testing experts, planning and designing tests manually can take up to 16 hours per feature, and AI can reduce that to about 2 hours. Test case creation drops from almost 24 hours to 1 to 3 hours at most.

By running only necessary tests, teams get faster feedback and fewer false positives.

Wrap‑up

Smart prioritization does more than cut run times; it also improves team morale. Engineers spend less time waiting for builds and more time on important release features. AI in software testing lets quality and speed work together.

Use case #2. Detecting and eliminating bugs before they affect the user experience

Problem

Testing teams know that many defects slip past unit and integration tests. Sooner or later, they surface in production and hurt end users. Reacting to defects in production is costly: it means additional resources, late nights, and eroded trust.

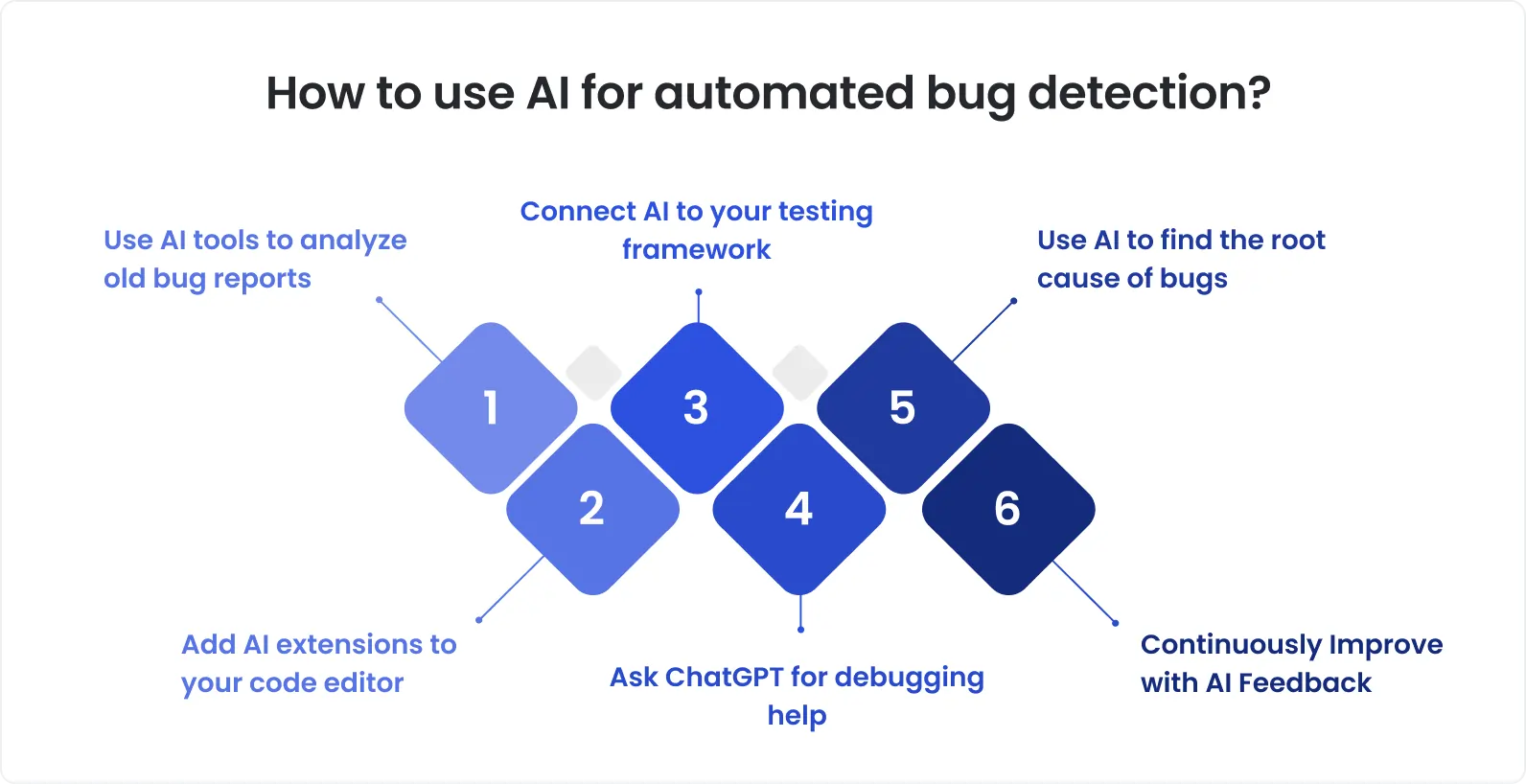

How AI helps

AI shifts testing left by predicting where failures will occur and enabling targeted prevention.

Learning from history. Models ingest historical defect data, test results, and production incidents. They identify patterns, such as modules that have produced many bugs or code changes that often introduce regressions.

Forecasting risk hotspots. By correlating code metrics (like complexity and churn) with past failures, AI forecasts which files or components are likely to break in the next release.

Suggesting proactive tests. Based on predictions, AI recommends new tests or areas for manual exploration. Testers can focus on these hotspots instead of combing through the entire system.

Benefits

Predictive QA transforms firefighting into prevention. In the Techreviewer survey, 82% of respondents reported at least a 20% productivity boost, and 25% reported improvements of over 50% after adopting AI for tasks like defect prediction. These gains come from catching issues early, before they cascade through the pipeline.

Banking leaders see similar advantages: Deloitte predicts that AI could add $2 trillion in profits to the global banking industry by 2028, partly by enhancing software reliability. When bugs never reach customers, both revenue and customer satisfaction rise.

Wrap‑up

Predictive QA complements, rather than replaces, human judgment. Testers still decide which areas need exploratory attention. But with AI flagging the riskiest spots, teams allocate resources wisely. The result is fewer urgent hotfixes and more time for innovation.

Use case #3. Getting the most out of dev time by fixing flaky tests

Problem

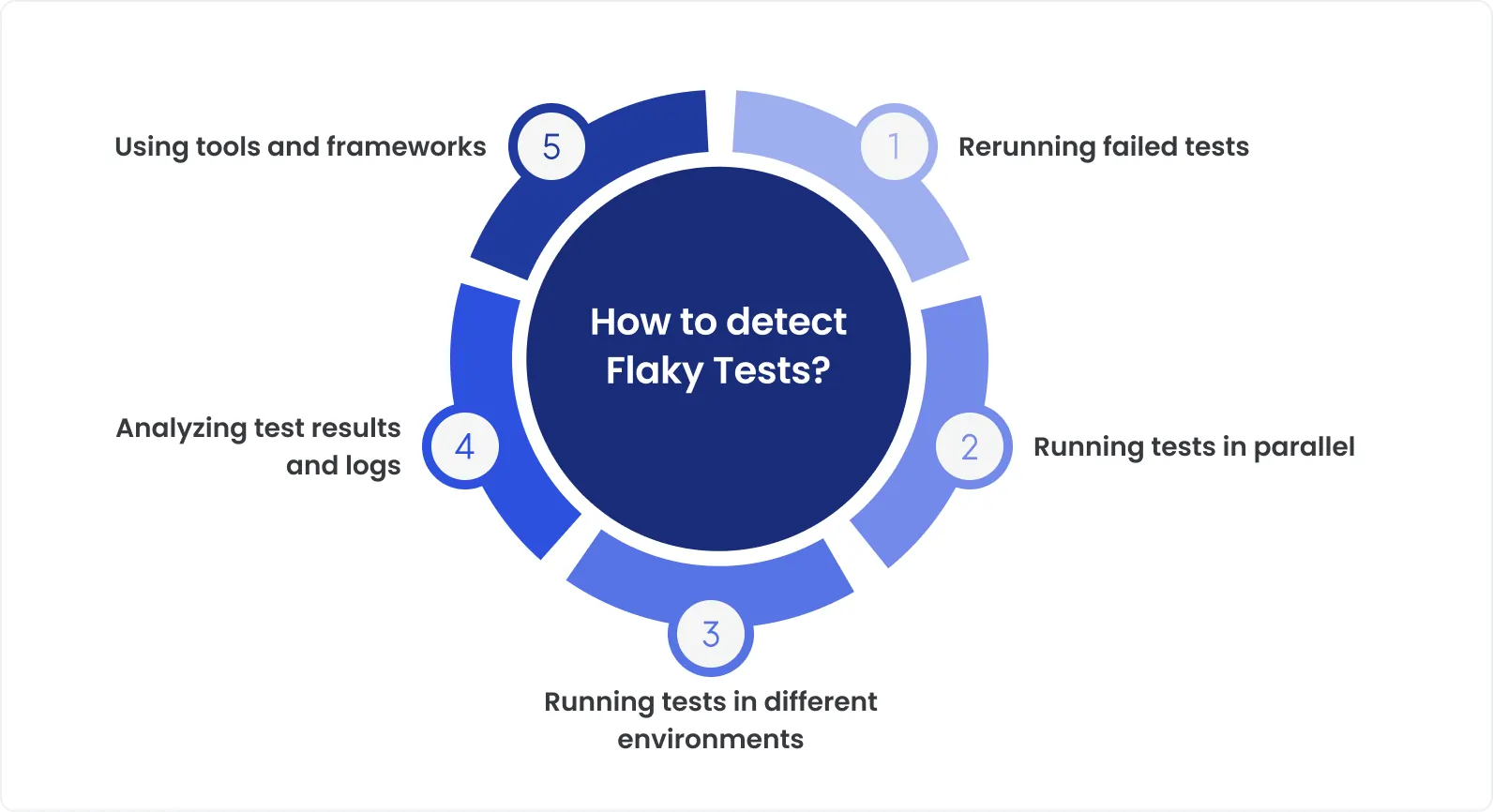

Automated tests sometimes fail for reasons unrelated to code quality: timing issues, changed element locators, or environment glitches. These flaky tests cause false alarms and erode trust in automation. Developers waste hours debugging tests instead of writing features.

How AI helps

Flakiness detection. AI monitors test runs and learns patterns of intermittent failures. It distinguishes between genuine defects and environment‑related glitches.

Self‑healing scripts. When an application’s UI changes (e.g., a button moves), AI updates the test scripts by finding the new element location.

Root cause analysis. Models analyze logs and signals to suggest why a test failed. This enables targeted fixes rather than guesswork.
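A common starting signal for flakiness detection is a test that both passes and fails on the same commit: the code did not change, so the differing outcome points to timing or environment rather than a real defect. The rule-based sketch below assumes test results are available as `(test_name, commit_sha, passed)` tuples; the function names and data format are hypothetical, and an AI-based tool would combine this signal with logs and timing data rather than rely on it alone.

```python
from collections import defaultdict


def _outcomes(runs):
    """Group observed pass/fail results by (test, commit)."""
    grouped = defaultdict(set)
    for test, commit, passed in runs:
        grouped[(test, commit)].add(passed)
    return grouped


def find_flaky(runs):
    """Tests that both passed and failed on the same commit (likely nondeterministic)."""
    return sorted({test for (test, _), seen in _outcomes(runs).items() if len(seen) > 1})


def consistent_failures(runs):
    """(test, commit) pairs that failed on every run: more likely genuine defects."""
    return sorted(key for key, seen in _outcomes(runs).items() if seen == {False})


runs = [
    ("checkout_flow", "a1b2c3", True),
    ("checkout_flow", "a1b2c3", False),
    ("checkout_flow", "a1b2c3", True),
    ("search_filters", "a1b2c3", False),
    ("search_filters", "a1b2c3", False),
    ("login", "a1b2c3", True),
]
print(find_flaky(runs))           # ['checkout_flow']
print(consistent_failures(runs))  # [('search_filters', 'a1b2c3')]
```

Separating the two lists is the point: `checkout_flow` goes to a quarantine or stabilization queue, while `search_filters` is reported as a probable real bug.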

Benefits

Self‑healing reduces the maintenance burden that often hinders test automation. DeviQA’s AI testing solution includes self‑healing features that adjust tests automatically. Maintenance tasks that used to consume 8–10 hours per feature now take around 4 hours.

Moreover, filtering out flaky tests spares developers false alarms and gives them reliable feedback. The Test Guild survey also found that 56% of teams are actively investigating AI adoption, partly to handle issues like flaky tests. The reason is clear: stable automation pipelines accelerate releases and reduce developer frustration.

Wrap‑up

AI cannot eliminate every flaky test, but it can turn flakiness from a chronic pain into a manageable nuisance. By fixing scripts and isolating environment issues, AI lets teams focus on real quality problems.

Key factors to consider for an AI-augmented testing partner

Building an AI‑enabled testing capability is not trivial. When might you benefit from partnering with a provider who already has AI integrated into its process?

Consider these situations:

Time‑to‑value matters. You need results quickly. Implementing AI yourself may require months of experimentation. A partner like DeviQA offers ready‑made frameworks that plug into your pipelines and deliver value from day one.

Your team is stretched thin. If developers and QA engineers spend more time maintaining test scripts than writing code, adding AI may feel like extra work. Outsourcing AI‑powered testing frees your team to focus on core product work while specialists handle the underlying models and infrastructure.

Scaling is your priority. Rapid growth means more applications, microservices, and user journeys to test. A partner with a scalable AI platform can expand coverage and keep pace with your release cadence without linear cost increases.

Lack of specialized skills. Data scientists, ML engineers, and QA experts with AI experience are hard to find. A provider brings cross‑functional talent and proven methods, reducing the risk of missteps.

Security and compliance concerns. In regulated industries (finance, healthcare, etc.), misconfiguring AI tools can expose sensitive data. Professional partners implement proper governance and adhere to frameworks like the NIST AI RMF and ISO standards.

A credible partner should also respect your existing workflows rather than forcing wholesale replacement.

Why teams choose DeviQA for AI-ready QA

If you want results fast without rebuilding your QA stack, DeviQA brings a proven mix of people, process, and AI. Our team has 16 years in the field, more than 600k man-days delivered, and a 96% client retention rate. That track record matters when you need reliable outcomes, not experiments.

What you get in practice:

Domain fluency in fintech, healthcare, and IoT, so tests map to real risk, not just tool output.

Independent execution. Our teams operate with minimal oversight and keep momentum.

Strong talent bar. Engineers are vetted and reference-checked, backed by a 4k+ expert community.

Ongoing R&D and tooling investment, so you benefit from modern AI workflows without building them yourself.

Flexible ways to work: QA outsourcing, managed testing, QA consulting, QA-as-a-Service, or a dedicated QA team.

Value-added services are included to raise visibility and reduce blind spots across your stack.

DeviQA helps you apply software testing using Artificial Intelligence where it pays off, while senior engineers cover the judgment calls. If you’re ready to cut cycle time and raise confidence without retooling alone, let’s talk.

Final thoughts

AI’s role in quality assurance is poised to grow, but success depends on how it’s applied. Here are the core takeaways:

AI adoption is accelerating. A majority of organizations already use AI somewhere in their businesses. Yet many still struggle with test coverage and maintenance.

AI excels at the tedious stuff. It generates and prioritizes test cases, predicts defect hotspots, and self‑heals scripts. These strengths lead to shorter cycles and lower costs.

Humans remain critical. AI can’t understand nuanced business logic or interpret regulatory requirements. Human testers catch creative attack paths, interpret results, and provide context. Most IT leaders insist on human validation alongside AI.

A hybrid approach wins. Organizations that mix AI tools with human expertise see the biggest productivity gains. They detect issues sooner and scale confidently.

Avoid the trap of expecting AI to replace skilled testers, and don’t ignore AI for fear of automation. Instead, treat AI as a powerful assistant.

Start small with targeted use cases like test prioritization or flaky test healing. Expand gradually as you learn. When in doubt, consult experts.