GPT-5 is hot off the press. Now more than ever, everyone is talking about AI. In its keynote speech, Google mentioned the word “AI” a whopping 121 times.

There are dozens of articles promising that AI‐driven security will solve all problems. Executives and decision-makers are under pressure to modernize overnight. Statistics fuel the hype:

McKinsey’s 2025 global AI survey found that more than three-quarters of organisations now use AI in at least one business function.

Nearly three out of four cyber‑buyers are willing to invest in AI‑enabled security tools. At the same time, data breaches are getting more expensive and more common.

IBM’s 2024 Cost of a Data Breach report, researched by the Ponemon Institute, put the global average cost at $4.88 million, a 10% increase over the previous year.

Two‑thirds of surveyed companies used AI in security operations, and those using it extensively reported average cost savings of about $2.2 million.

Those numbers are impressive. However, they can also be misleading. The real question for CTOs, engineering leads, and QA managers isn’t which approach is “better” but how to combine AI and traditional testing to protect large, complex estates without blowing the budget. Human‑led testing is not obsolete. The smartest QA strategy blends both: automation for scale and humans for strategy and context.

Why do the best testing approaches still rely on both machine and human intelligence?

Penetration testing has two core approaches:

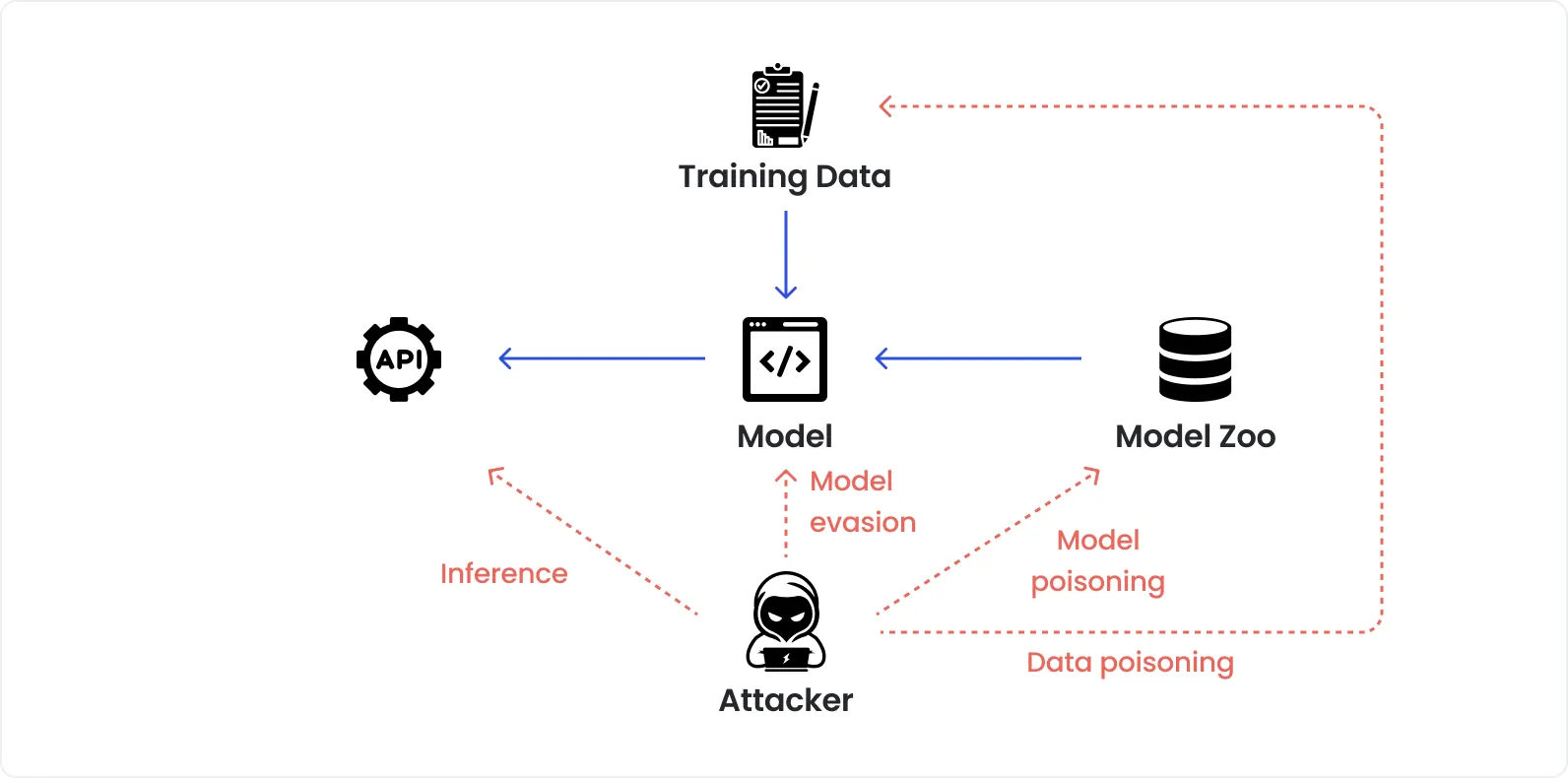

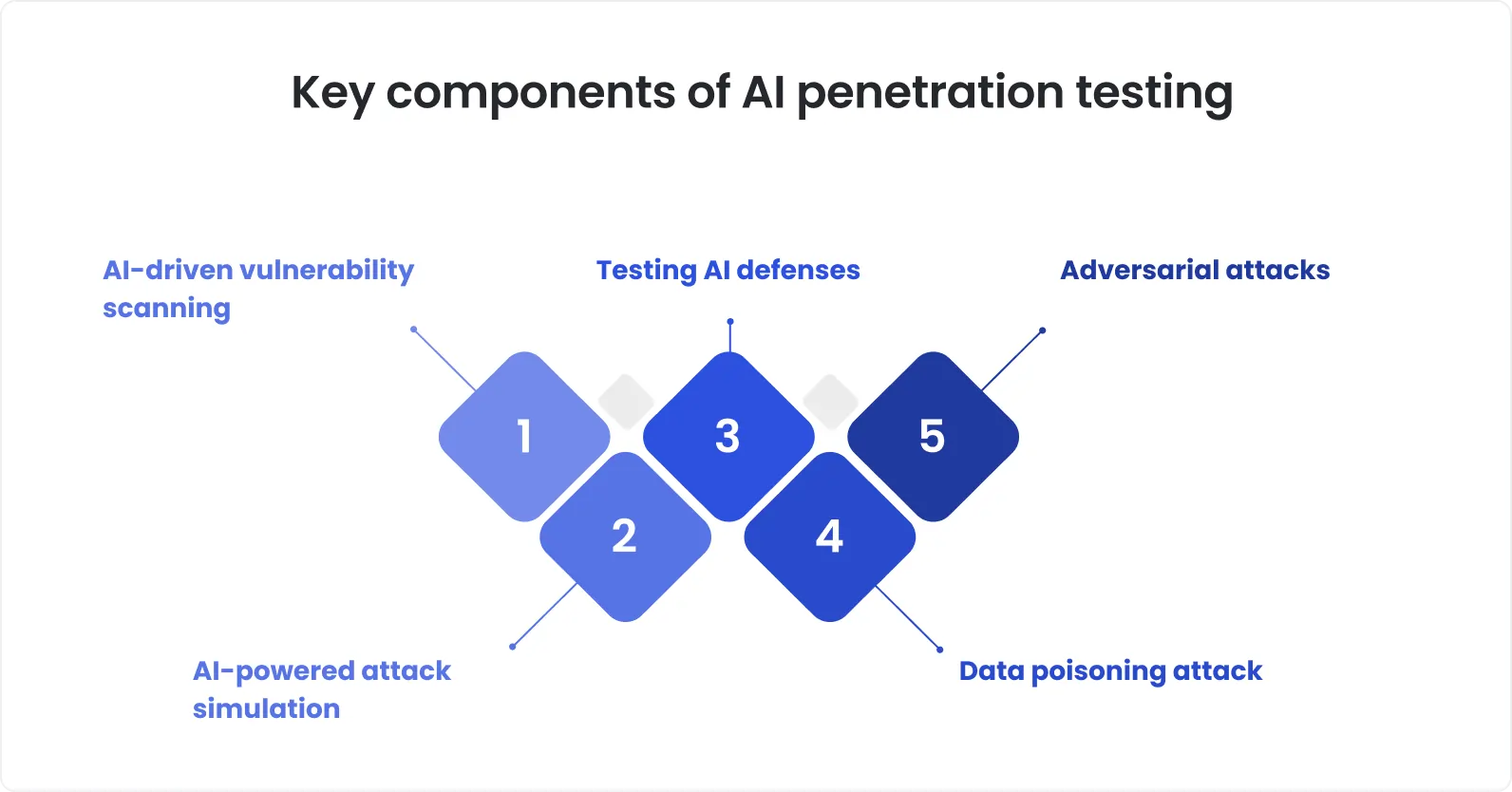

AI‑assisted or automated testing. This approach uses tools to scan code and systems for known vulnerabilities and patterns. Models generate and execute tests at machine speed and update as the codebase changes. These tools excel at breadth and repetition; they never tire or get bored. However, they lack situational awareness. Even the best AI-powered penetration testing still needs someone to decide which risks matter and why. The NIST AI Risk Management Framework stresses that AI systems should augment, not replace, human decision‑making.

Traditional human‑led testing. This approach relies on skilled ethical hackers who think like attackers. They chain vulnerabilities across systems, explore business logic, social engineering, and process flaws that no scanner can predict. It’s messy, creative work that draws on experience. Verizon’s 2024 Data Breach Investigations Report notes that 68% of breaches involve a “human element” – people falling for phishing or making errors. Understanding these behaviours and simulating them is a human job.

Combining both approaches gives you the best of both worlds: machines for coverage and humans for context. A skilled consultant can do the following:

interpret AI output;

prioritise findings by business impact;

design targeted follow‑up tests.

This blend is exactly what the NIST framework calls for: clear governance, risk mapping, and documented human oversight. Ignoring one or the other leads to blind spots, whereas leaning on both lets you scale without losing strategic alignment.
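The "prioritise findings by business impact" step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Finding` fields, the three criticality tiers, and the weights are hypothetical, and a real programme would derive them from its own risk model. The point is that the scanner supplies the severity, while a person supplies the criticality of the asset.

```python
from dataclasses import dataclass

# Hypothetical weights: how much a finding on each asset tier matters to the business.
CRITICALITY_WEIGHT = {"low": 1, "medium": 2, "high": 3}


@dataclass(frozen=True)
class Finding:
    title: str
    asset: str
    severity: float   # scanner-reported score on a 0.0-10.0 scale
    criticality: str  # human-assigned tier of the affected asset


def business_impact(finding: Finding) -> float:
    """Scale the scanner's severity by the human-assigned asset criticality."""
    return finding.severity * CRITICALITY_WEIGHT[finding.criticality]


def prioritise(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest business impact."""
    return sorted(findings, key=business_impact, reverse=True)


findings = [
    Finding("Verbose error page", "marketing-site", 7.5, "low"),
    Finding("Missing rate limit", "payments-api", 5.0, "high"),
    Finding("Outdated TLS config", "internal-wiki", 6.0, "medium"),
]

for f in prioritise(findings):
    print(f"{business_impact(f):5.1f}  {f.asset}: {f.title}")
```

In this made-up data set, the finding with the lowest raw severity ends up first because it sits on the most critical asset, which is exactly the kind of call a scanner cannot make on its own.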

That may be why AI is reaching beyond pentesting and is currently changing regression testing as well.

How is AI pen testing different from traditional pen testing? Key points to keep in mind.

In short, there are several core differences between AI pentesting and traditional pentesting. Let’s start with the latter.

Traditional penetration testing

It is scenario‑based and context‑aware.

Testers perform reconnaissance, exploit weaknesses, and chain them together to simulate real attacks.

They handle social engineering, misconfiguration, flawed business logic, and subtle issues such as Broken Object Level Authorization on APIs.

They also interpret findings in light of regulatory requirements and business processes, something a script can’t do.

AI‑powered penetration testing

It is continuous and automated.

AI penetration testing excels at scanning thousands of endpoints, reviewing code and dependencies, fuzzing inputs, and generating variations.

AI also speeds up test cycles. For instance, our AI testing ecosystem reduces test planning and creation from days to hours. That makes it ideal for high‑frequency releases and sprawling microservices.

Yet the goals and contexts differ.

First and foremost, AI scans produce lists of potential issues while humans must filter false positives and assess severity.

Second, AI tools operate on patterns they’ve seen before, while testers discover novel attack chains. Many human‑centred attack vectors, such as phishing campaigns, insider threats, and supply‑chain compromises, fall outside the reach of automated scanners. That gap matters, because the 2024 DBIR still attributes most breaches to credential theft and human error.

So, while the methods share the same end goal (finding and fixing vulnerabilities), their strengths and limitations differ markedly. Keep in mind that choosing the wrong approach can be costly.

The power of AI is in fast scaling. Strategy is its weak point

Why is AI so attractive?

Because it scales. The attack surface is exploding: McKinsey notes that organisations spent around $200 billion on cybersecurity products in 2024 and that the market will grow 12.4% annually. Phishing attacks have risen by 1,265% since generative AI became mainstream. It simply isn’t feasible to manually test every web app, API, cloud resource, and third‑party integration on a continuous basis.

Automated tools tackle this scale. They track changes in infrastructure, generate tests, steer fuzzers towards promising inputs, and cluster similar findings. Companies that use AI and automation extensively save about $2.2 million per breach and can reduce average breach lifecycles by days.

Example from DeviQA:

We have an AI ecosystem that does the following:

auto‑generates test cases from your app structure;

prioritises execution based on real‑time data;

predicts defect hotspots before they manifest;

self‑heals tests when the application changes.

With such an abundance of tools for optimization, AI pentesting becomes easier, requires less manual input, and is less prone to error.

However, it is crucial to keep in mind that AI cannot create a security strategy. It can’t decide which systems are critical to your revenue, which regulatory obligations apply or how to align testing with your threat model. McKinsey points out that while response times have improved, it still takes an average of 73 days to contain an incident. Tools help, but they don’t tell you where to start or what to prioritise. That’s the domain of experienced QA consultants. They map risks to business objectives, choose the right mix of tests, and adapt to emerging threats and regulations.

Pro tip: Let AI watch the entire attack surface and flag everything it finds, however noisy, and have experts triage the results. This combination prevents alert fatigue and ensures that scarce engineering time is spent fixing the most important issues.

Traditional pentesting reveals what AI pen testing misses

Human testers are invaluable for creative threat simulation. They think like adversaries, chain seemingly insignificant flaws into serious exploits and adapt their techniques to the business context.

The Verizon DBIR shows that social engineering and credential theft still cause the majority of breaches. Automated scanners do not pick up on these subtleties. For example, a tester might discover that an e‑commerce platform allows gift card balances to go negative and chain that with a session fixation flaw to drain accounts. Or they might craft phishing campaigns that bypass MFA, something a scanner won’t attempt.
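To make the gift card example concrete, here is a minimal sketch of the kind of business rule a tester probes. The `GiftCard` class and its methods are hypothetical and not taken from any real platform, and the session fixation half of the chain is not modelled. The flawed method is perfectly valid code, so a pattern-matching scanner has little to flag; the problem is a missing business rule.

```python
class InsufficientBalance(Exception):
    """Raised when a redemption would overdraw the card."""


class GiftCard:
    def __init__(self, balance_cents: int) -> None:
        self.balance_cents = balance_cents

    def redeem_unchecked(self, amount_cents: int) -> None:
        # Flawed version: nothing stops the balance from going negative.
        self.balance_cents -= amount_cents

    def redeem(self, amount_cents: int) -> None:
        # Guarded version: reject non-positive amounts and overdrafts.
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        if amount_cents > self.balance_cents:
            raise InsufficientBalance("redemption exceeds balance")
        self.balance_cents -= amount_cents


flawed = GiftCard(balance_cents=1_000)
flawed.redeem_unchecked(2_500)
print(flawed.balance_cents)  # -1500: the business rule was never enforced

guarded = GiftCard(balance_cents=1_000)
try:
    guarded.redeem(2_500)
except InsufficientBalance:
    print("rejected, balance still", guarded.balance_cents)
```

A human tester finds this by asking what the business would never want to happen and then trying to make it happen, which is a different activity from matching known vulnerability signatures.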

The role of human creativity in pentesting is underappreciated

Creativity also matters when dealing with new types of attacks. Generative AI has turbo‑charged phishing. As we mentioned before, phishing attacks have risen by 1,265%. Most likely, with synthetic voices and AI agents getting better and better, we will see cybersecurity threats of unprecedented complexity.

In that context, only human testers can design and execute social engineering campaigns that mirror these evolving tactics. Similarly, complex business logic vulnerabilities, like manipulating pricing rules or workflow approvals, are discovered by understanding the business, not by running pattern‑matching scripts.

AI pentesting definitely wins in speed and coverage

Machines, however, win on speed. They run thousands of checks in minutes, never get bored, and catch many routine issues before they reach production. Automated scanning is perfect for continuous deployment pipelines where each commit should trigger tests. AI can also find patterns that humans miss. For example, combining static analysis with models helps detect vulnerabilities in code dependencies and cut false positives.

Broader industry trends point the same way. Gartner predicts that agentic AI will be embedded in 33% of enterprise software applications by 2028, up from less than 1% in 2024. An IEEE survey listed AI as the most important technology for the second year in a row.

Clearly, test automation is here to stay. Neglecting it means falling behind, especially for companies with dozens or hundreds of apps to secure.

DeviQA advice

Use automated tools for broad coverage and to catch regressions quickly. Then invest your human testing time where it counts – critical workflows, integration points and areas with high business impact.

For practical tips, read our articles, such as the CTO checklist for AI‑enhanced performance QA and the Guide to maximizing ROI with AI functional testing and regression testing.

The power of true scaling and efficiency is in hybrid thinking

When you need to secure hundreds or thousands of assets, neither pure AI nor pure manual testing suffices. The attack surface changes hourly; new features and services appear; dependencies are updated. To keep up, you need automated penetration testing for breadth and human penetration testing for depth. This is why we, at DeviQA, advocate a hybrid model.

A hybrid approach uses automation to continuously discover assets, run baseline scans, and retest after every change. It uses AI to prioritise results, dedupe findings, and surface patterns.

Meanwhile, experienced testers focus on threat modelling, business logic abuse, complex chaining, and social engineering. They validate AI‑found issues, perform manual proof‑of‑concept exploitation, and map findings to compliance frameworks like GDPR or PCI DSS. They also provide clear remediation guidance and track fix verification, tasks that require judgement.

Implementing a hybrid programme can follow a simple structure:

Scope and map. Inventory all applications, APIs, and cloud assets. Tag systems by business criticality and regulatory requirements.

Baseline with automation. Run continuous scans and AI‑driven static analysis. Integrate scanning into your CI/CD pipelines. Use AI to generate targeted test cases for new endpoints.

Manual deep dives. Schedule periodic manual testing focused on high‑impact areas: authentication, authorization, payment flows, data storage, and integration points. Include social engineering and process reviews.

Remediation and retesting. Use AI to cluster findings and prioritise by impact. Feed deduped tickets to engineering, retest fixes, and close the loop.

Continuous improvement. Regularly adjust scope, update threat models, and review AI configuration. Follow frameworks such as the NIST AI RMF to maintain clear governance and human oversight.
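The "cluster findings and feed deduped tickets to engineering" step can be sketched as follows. This is a simplified illustration, not a description of any particular tool: an exact-match key on rule and asset stands in for whatever similarity model an AI-driven product would use, and the field names (`rule_id`, `asset`, `location`, `severity`) are assumptions.

```python
from collections import defaultdict


def fingerprint(finding: dict) -> tuple[str, str]:
    """Hypothetical cluster key: the same rule on the same asset counts as one issue."""
    return (finding["rule_id"], finding["asset"])


def cluster(findings: list[dict]) -> list[dict]:
    """Collapse duplicate findings into one ticket per fingerprint."""
    groups: dict[tuple[str, str], list[dict]] = defaultdict(list)
    for finding in findings:
        groups[fingerprint(finding)].append(finding)

    tickets = []
    for (rule_id, asset), members in groups.items():
        tickets.append({
            "rule_id": rule_id,
            "asset": asset,
            "occurrences": len(members),
            "max_severity": max(m["severity"] for m in members),
            "locations": sorted({m["location"] for m in members}),
        })
    # Highest severity first; ties broken by how often the issue appears.
    return sorted(
        tickets,
        key=lambda t: (t["max_severity"], t["occurrences"]),
        reverse=True,
    )


raw = [
    {"rule_id": "sql-injection", "asset": "orders-api", "location": "/orders?id=", "severity": 9.1},
    {"rule_id": "sql-injection", "asset": "orders-api", "location": "/orders/search", "severity": 8.7},
    {"rule_id": "weak-cipher", "asset": "legacy-portal", "location": ":443", "severity": 5.3},
    {"rule_id": "sql-injection", "asset": "orders-api", "location": "/orders?id=", "severity": 9.1},
]

for ticket in cluster(raw):
    print(ticket["rule_id"], ticket["asset"], ticket["occurrences"], ticket["locations"])
```

Four raw findings become two tickets here, so engineers see one SQL injection issue with its affected locations rather than three separate alerts.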

Pro tip: When designing your hybrid programme, align testing frequencies with your release cadence and risk tolerance. High‑risk, high‑value systems might need weekly manual reviews in addition to automated checks. Lower‑risk assets can rely on automated scans with periodic sampling.
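One way to make that cadence explicit is a small lookup that turns a risk tier into a review deadline. The sketch below is illustrative only: the weekly interval for high-risk systems follows the tip above, while the medium and low intervals, the tier names, and the asset names are assumptions to be replaced with your own.

```python
from datetime import date, timedelta

# Hypothetical cadences in days; tune to your own release rhythm and risk tolerance.
MANUAL_REVIEW_INTERVAL_DAYS = {"high": 7, "medium": 90, "low": 365}


def next_manual_review(last_review: date, risk_tier: str) -> date:
    """Return the date the next human-led review is due for an asset."""
    return last_review + timedelta(days=MANUAL_REVIEW_INTERVAL_DAYS[risk_tier])


def is_overdue(last_review: date, risk_tier: str, today: date) -> bool:
    """True when the asset has gone longer than its tier allows without manual review."""
    return today > next_manual_review(last_review, risk_tier)


assets = [
    ("payments-api", "high", date(2025, 1, 6)),
    ("internal-wiki", "low", date(2024, 11, 1)),
]
today = date(2025, 1, 20)

for name, tier, last_review in assets:
    print(name, next_manual_review(last_review, tier), is_overdue(last_review, tier, today))
```

Keeping the intervals in one table makes the trade-off visible and easy to revisit when the threat model or release cadence changes.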

Our piece on how to implement AI into the QA process without breaking what works offers more guidance.

Conclusion

Neither AI nor traditional penetration testing services alone solves the scale problem.

Automated tools provide unmatched speed and coverage and can reduce breach costs by millions.

Human testers bring creativity, context, and strategic judgement, uncovering vulnerabilities that machines miss.

The most effective approach is hybrid: use AI for breadth, and let experts drive strategy and depth.

If you’re still weighing AI penetration testing vs traditional penetration testing, remember that both depend on context and expertise. Machines extend reach; humans make the hard calls. Don’t let marketing jargon convince you otherwise.

To explore how a hybrid approach can improve your security posture and support secure scaling, book a QA consultation. We’ll help you design a testing programme that fits your environment, pace, and business. Our experts will also show you how to leverage AI without losing sight of the human element.