The rise of cloud computing and the wide adoption of DevOps demand a more efficient approach to performance testing to ensure optimal user experience and prevent costly downtime.

The traditional approach, built on manual QA testing, static test scenarios, and post-deployment bottleneck discovery, may have worked well in the past, but today it often cracks under pressure.

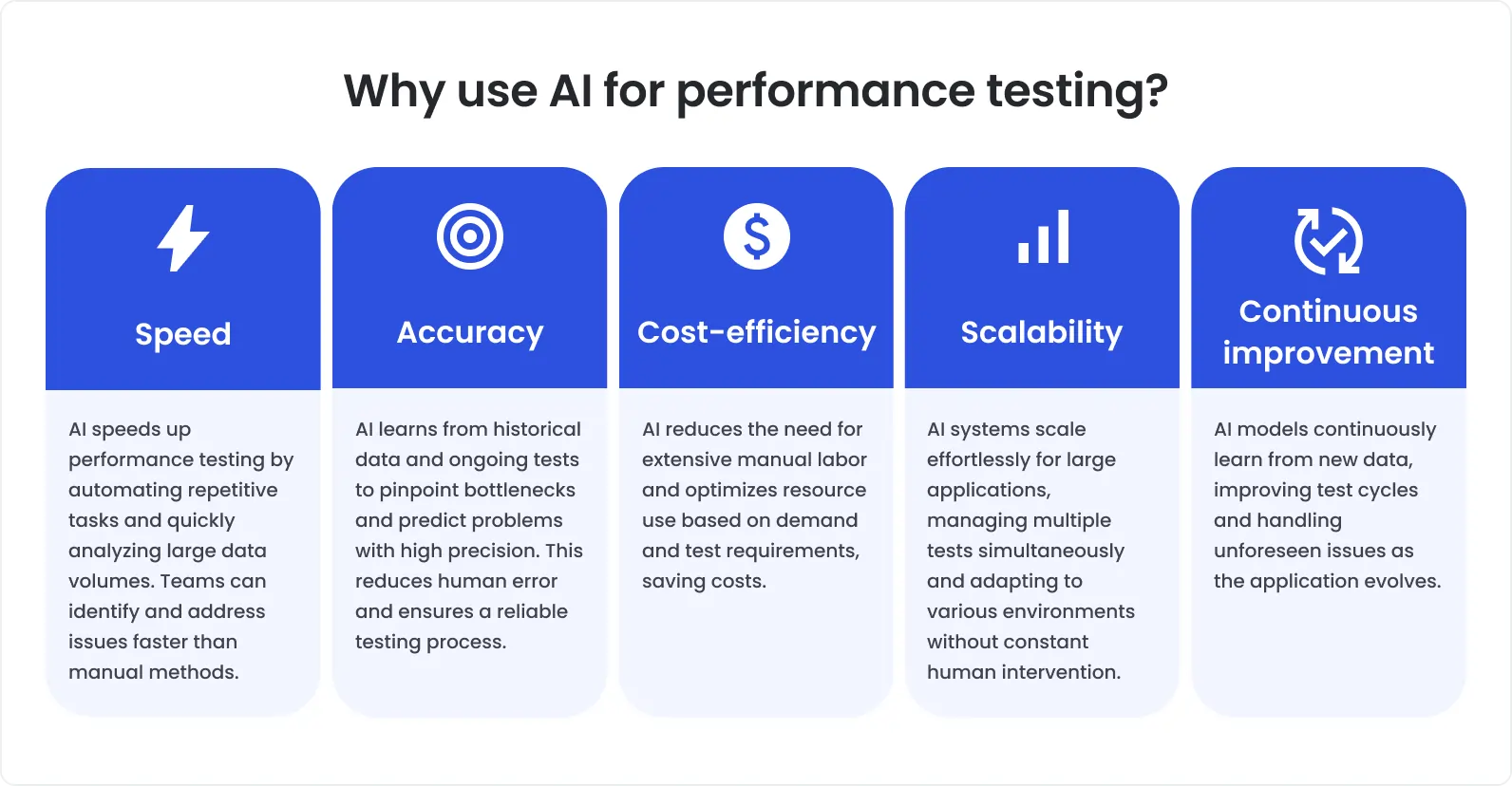

That’s where AI steps in, offering an opportunity for faster and smarter QA performance testing for a fraction of the cost. No wonder the use of AI in performance testing has increased by 150% over the past two years.

If you are not there yet, it’s time to move from curiosity to clarity and learn what to look for when building or refining an AI-driven performance QA strategy.

The weak spots of traditional performance testing

Despite its long-standing use, traditional performance testing comes with several challenges and limitations that impact product stability as well as business metrics.

The key pain points QA teams face:

1. A lot of time and effort is required

Traditional performance testing involves manual environment setup and configuration, script writing and maintenance, and dependency management. All of this consumes a great deal of time and effort and slows down the development cycle, especially in agile and DevOps environments.

2. Real-world scenario simulation is challenging

Today’s software offers dynamic content and diverse user interactions, which makes realistic load scenarios hard to simulate. Manual scripting struggles to cover every variation accurately, so testing gaps appear and increase the risk of performance issues in production.

3. Cloud waste is the norm

Lacking a precise way to right-size environments, teams often overprovision infrastructure just to be safe. And without real-time feedback and dynamic scaling, they may run oversized tests that spin up more instances than needed. The result is higher cloud bills with minimal ROI.

In a Stacklet survey, 78% of respondents estimated that more than 20% of their cloud spend is wasted.

4. Analyzing huge data volumes is a nightmare

Performance testing generates vast amounts of data, from response times to resource utilization metrics. Extracting insights from these results manually requires both expertise and time and can quickly become overwhelming.

5. Limited real-time monitoring slows down the process

Most traditional performance testing tools don’t offer real-time monitoring, making it difficult to catch and fix issues as they arise. Instead, teams uncover critical issues only when testing is complete.

6. Scalability is always a problem

As applications grow, so do performance demands. However, simulating thousands or even millions of concurrent users is resource-intensive and pricey. Traditional setups often can’t scale without hitting infrastructure limits.

What does this cost your business?

The shortcomings of traditional performance testing result in delayed releases, unstable systems, and poor user experience.

Delayed releases → missed market opportunities and lost competitive advantage

Haystack’s research reveals that 7 in 10 software projects miss their deadlines. The problem is widespread enough that 89% of decision makers at US businesses, and 81% in the UK, are now concerned about on-time software delivery.

Underperforming systems → frequent downtime and revenue loss

According to ITIC’s 2024 Hourly Cost of Downtime Survey, a single hour of downtime costs more than $300,000 for over 90% of mid-size and large enterprises.

Unhappy users → low conversion rates and poor retention

Walmart found that for every 1-second improvement in page load time, conversions increased by 2%. Performance bottlenecks that go undetected in testing can drive users away before they even convert.

Traditional performance testing lags behind in many critical areas, calling for a smarter approach, and AI takes up the challenge.

Capabilities of AI in performance testing

Artificial intelligence has shaken up the quality assurance industry, and, when used thoughtfully, it can also streamline performance testing. The gains go beyond testing speed: smart prediction adds strategic value as well.

How AI optimizes performance testing:

AI helps QA teams optimize different aspects of performance testing and rethink how it is executed.

- Smarter test scenario generation

By analyzing historical usage patterns, user journeys, and traffic spikes, AI helps create realistic, dynamic load tests. This way, behavior-based simulations that reflect actual user interactions replace static, manually crafted scenarios, ensuring more efficient issue detection.

- Efficient resource allocation

Based on past test runs and usage data, AI can predict the infrastructure a test actually needs. This helps right-size test environments automatically, avoiding overprovisioning and reducing cloud spend.
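AI-based sizing models are usually more elaborate, but the underlying question can be sketched with plain arithmetic: based on past runs, how many virtual users (VUs) can one load-generator runner drive? The sketch below is a deliberately simple, non-ML stand-in that assumes CPU on the busiest runner scales roughly linearly with VUs per runner and targets 70% CPU; the `PastRun` record and all figures are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class PastRun:
    virtual_users: int
    runners: int          # load-generator instances used
    peak_cpu_pct: float   # peak CPU on the busiest runner


def vus_per_runner(runs, target_cpu_pct=70.0):
    """Conservative VUs-per-runner estimate at the target CPU.

    Assumes CPU grows roughly linearly with VUs per runner, and takes the
    minimum across runs so the estimate errs on the side of more runners.
    """
    estimates = [
        (r.virtual_users / r.runners) * target_cpu_pct / r.peak_cpu_pct
        for r in runs
    ]
    return min(estimates)


def runners_needed(target_vus, runs, target_cpu_pct=70.0):
    """Smallest runner count that keeps each runner near the target CPU."""
    return max(1, math.ceil(target_vus / vus_per_runner(runs, target_cpu_pct)))


# Hypothetical history of two earlier runs.
history = [PastRun(1000, 4, 50.0), PastRun(2000, 5, 80.0)]
print(runners_needed(5000, history))  # 15
```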

- Real-time anomaly detection

Throughout test execution, ML algorithms monitor metrics such as latency, throughput, and CPU usage to detect abnormalities in real time. As a result, critical performance bottlenecks are caught early and root cause analysis speeds up.
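One of the simplest statistical detectors that fits this description is a rolling z-score: compare each new sample with the mean and standard deviation of a trailing window. The sketch below is an illustrative stand-in, not the model any specific product uses; the window size, warm-up count, 3-sigma threshold, and latency values are assumptions.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flags a sample whose z-score against a trailing window exceeds `threshold`."""

    def __init__(self, window=30, threshold=3.0, min_samples=10):
        self.values = deque(maxlen=window)  # oldest samples drop out automatically
        self.threshold = threshold
        self.min_samples = min_samples      # no verdicts until the window has warmed up

    def update(self, value):
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mean = statistics.fmean(self.values)
            stdev = statistics.pstdev(self.values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        # The sample joins the window either way; a production detector might
        # exclude flagged points so a sustained incident does not become "normal".
        self.values.append(value)
        return is_anomaly


# Hypothetical stream of latency samples (ms).
detector = RollingZScoreDetector()
for latency_ms in [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 250]:
    if detector.update(latency_ms):
        print(f"anomaly: {latency_ms} ms")
```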

- Automated test data analysis

Data analysis is AI’s forte. By analyzing logs, traces, and performance metrics, AI models detect regressions, correlations, and performance degradation. This saves teams a great deal of time and effort and surfaces insights far faster than manual review.

- Predictive analytics

By analyzing historical performance data, AI can forecast potential performance issues before they occur. It spots patterns in resource usage, transaction frequency, and system response times, and AI-powered testing solutions then alert teams to likely problems so they can fix them in advance.

- Self-tuning test parameters

While traditional performance testing relies on predefined loads, AI, which learns from each test run, can dynamically adjust test parameters – test duration, virtual user ramp-up, failure thresholds, etc. – in response to real-time performance feedback. This ensures more accurate and adaptive test coverage, enabling teams to better understand system limits and behavior under varying levels of stress.
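Adjusting ramp-up in response to live feedback can be sketched as a small control loop: keep adding virtual users while the system stays within its SLO, and halve the step after a breach until the step becomes too small to matter. This is a simple rule-based sketch, not a learned model; `measure` stands in for a hypothetical hook that runs a short load burst and returns p95 latency and error rate, simulated here with a toy system.

```python
from typing import Callable, Tuple

# measure(vus) -> (p95_latency_ms, error_rate); hypothetical hook into a load tool
Measure = Callable[[int], Tuple[float, float]]


def find_capacity(measure: Measure, start_vus=50, step=200, min_step=10,
                  p95_slo_ms=500.0, max_error_rate=0.01, max_vus=100_000):
    """Estimate the highest VU count that still meets the SLO.

    Ramps up by `step` while healthy; on a breach, halves the step and retries
    just above the last healthy level. Stops once the step drops below `min_step`.
    """
    best_healthy = 0
    vus = start_vus
    while step >= min_step and vus <= max_vus:
        p95_ms, error_rate = measure(vus)
        if p95_ms <= p95_slo_ms and error_rate <= max_error_rate:
            best_healthy = max(best_healthy, vus)
            vus += step
        else:
            step //= 2
            vus = best_healthy + step
    return best_healthy


def simulated_system(vus):
    # Toy system: latency and errors degrade sharply above 1,234 concurrent users.
    return (200.0, 0.0) if vus <= 1234 else (900.0, 0.05)


print(find_capacity(simulated_system))  # 1225
```

The result lands within a couple of `min_step` increments of the true limit; a smaller `min_step` buys precision at the cost of more load bursts.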

- Better scalability

AI can dynamically ramp up or ramp down test infrastructure across distributed systems based on real-time demand. Thanks to this approach, it’s easier to simulate large-scale traffic without hitting infrastructure limits.

All in all, AI performance testing is nothing like the traditional approach you may be used to. However, it shouldn’t be seen as a panacea.

To deliver the desired results, AI-driven performance testing requires an efficient implementation. It’s not enough just to pick one of the modern AI-powered tools. To make it work, you need:

Domain knowledge: In spite of all its capabilities, AI can’t replace human expertise. It needs to be guided by QA engineers who understand the system architecture, bottlenecks, and what good performance actually looks like.

Clean, well-labeled data: AI thrives on good input. Poor or inconsistent data leads to false insights or missed anomalies.

Strong orchestration: Seamless AI integration into CI/CD pipelines, observability tools, and infrastructure provisioning is key to realizing its full potential.

Human-in-the-loop validation: Even the smartest AI-powered testing system isn’t fully autonomous; it works as an assistant to people, not a replacement for them. Oversight is still needed: QA engineers should review AI-generated insights and regularly refine the models.

Tips for evaluating AI performance testing solutions like a CTO

It’s no secret that many vendors today label their performance testing solutions as AI-powered while still relying on static thresholds and if-else rules.

As a CTO, you need to understand how AI-driven performance testing solutions work under the hood, not just rely on marketing claims.

Let’s see what can help you assess the potential value of the offered AI-powered testing solutions.

Pay attention to algorithm transparency

Ask which algorithms a performance testing solution uses and how they actually work. The decision-making process should be clear and explainable. If a vendor can’t explain why something was flagged or how their model adapts over time, that’s a bad sign.

Check data hygiene

Review how raw performance data is collected, cleaned, normalized, and fed into models. A trustworthy vendor will follow clear hygiene practices such as de-duplication, noise filtering, and keeping test data separate from training data.
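To make these practices concrete, here is a minimal sketch of what de-duplication, noise filtering, and train/test separation can look like for raw latency samples. The `Sample` schema, the 60-second warm-up cut-off, the plausibility bound, and the example data are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    run_id: str
    timestamp_s: float   # seconds since the run started
    endpoint: str
    latency_ms: float


def clean(samples, warmup_s=60.0, max_latency_ms=60_000.0):
    """De-duplicate, drop warm-up samples, and discard implausible values."""
    seen = set()
    cleaned = []
    for s in samples:
        key = (s.run_id, s.timestamp_s, s.endpoint)  # assumed to identify one measurement
        if key in seen:
            continue  # duplicate, e.g. the same sample shipped twice by a log agent
        seen.add(key)
        if s.timestamp_s < warmup_s:
            continue  # warm-up noise: cold caches, JIT compilation, connection setup
        if not 0 < s.latency_ms <= max_latency_ms:
            continue  # zero, negative, or absurdly large values are treated as broken
        cleaned.append(s)
    return cleaned


def split_by_run(samples, holdout_runs):
    """Hold out whole runs so evaluation never sees data the model was trained on."""
    train = [s for s in samples if s.run_id not in holdout_runs]
    test = [s for s in samples if s.run_id in holdout_runs]
    return train, test


# Hypothetical raw samples.
raw = [
    Sample("run-1", 30.0, "/search", 900.0),   # warm-up
    Sample("run-1", 75.0, "/search", 120.0),
    Sample("run-1", 75.0, "/search", 120.0),   # duplicate
    Sample("run-2", 80.0, "/cart", -1.0),      # broken value
    Sample("run-2", 90.0, "/cart", 210.0),
]
train, test = split_by_run(clean(raw), holdout_runs={"run-2"})
print(len(train), len(test))  # 1 1
```

Splitting by run rather than by individual sample matters: samples from the same run are strongly correlated, so mixing them across training and evaluation would inflate apparent accuracy.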

Look for CI/CD compatibility

AI-powered testing brings real value only when it fits your delivery pipeline. Verify how well the solution works with your CI/CD ecosystem, and opt for one that integrates seamlessly with your stack (GitHub Actions, Jenkins, GitLab CI, etc.).
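As an illustration of what fitting into a pipeline means in practice, a performance check usually ends in an exit code that GitHub Actions, Jenkins, or GitLab CI can act on. The sketch below compares a run’s p95 latency against a stored baseline; the JSON layout and the 10% tolerance are assumptions, and an AI-driven gate would derive the baseline from history rather than a fixed file.

```python
import json
import math
import sys


def percentile(values, pct):
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def gate(baseline_p95_ms, latencies_ms, tolerance=0.10):
    """Return a process exit code: 0 if p95 is within tolerance of the baseline, else 1."""
    p95 = percentile(latencies_ms, 95)
    limit = baseline_p95_ms * (1 + tolerance)
    print(f"p95={p95:.1f} ms, baseline={baseline_p95_ms:.1f} ms, limit={limit:.1f} ms")
    return 0 if p95 <= limit else 1


if __name__ == "__main__" and len(sys.argv) == 2:
    # Hypothetical results file: {"baseline_p95_ms": 180.0, "latencies_ms": [...]}
    with open(sys.argv[1]) as fh:
        results = json.load(fh)
    sys.exit(gate(results["baseline_p95_ms"], results["latencies_ms"]))
```

A CI step could then run something like `python perf_gate.py results.json` (file names hypothetical) and fail the job on a non-zero exit code.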

Integration with observability tools is key

Any AI model is only as good as the data it’s fed, so seamless integration with Prometheus, OpenTelemetry, and the APM tools you already use is a must.
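For a sense of what this integration involves at the lowest level, Prometheus exposes range queries over HTTP at `/api/v1/query_range`, returning a JSON matrix of timestamp/value pairs that a model can consume. The sketch below uses only the standard library; the server address and the `http_request_duration_seconds_bucket` metric name are assumptions that depend on how your services are instrumented.

```python
import json
import urllib.parse
import urllib.request


def build_query_range_url(base_url, promql, start, end, step="15s"):
    """URL for Prometheus' range-query endpoint (start/end as Unix timestamps or RFC 3339)."""
    params = urllib.parse.urlencode({"query": promql, "start": start, "end": end, "step": step})
    return f"{base_url.rstrip('/')}/api/v1/query_range?{params}"


def parse_matrix(payload):
    """Convert a query_range response into {"label=value,...": [(timestamp, value), ...]}."""
    if payload.get("status") != "success":
        raise ValueError(f"Prometheus query failed: {payload.get('error')}")
    series = {}
    for result in payload["data"]["result"]:
        labels = ",".join(f"{k}={v}" for k, v in sorted(result["metric"].items()))
        # Prometheus encodes sample values as strings (including "NaN").
        series[labels] = [(float(ts), float(value)) for ts, value in result["values"]]
    return series


def fetch_series(base_url, promql, start, end, step="15s", timeout=10):
    """Run a range query against a live Prometheus server and parse the result."""
    url = build_query_range_url(base_url, promql, start, end, step)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_matrix(json.load(response))


# Hypothetical p95 latency query; adjust the metric name to your instrumentation.
P95_QUERY = (
    "histogram_quantile(0.95, "
    "sum by (le) (rate(http_request_duration_seconds_bucket[5m])))"
)
```

A call such as `fetch_series("http://prometheus:9090", P95_QUERY, start, end, step="30s")` (address hypothetical) would return one time series per label set, ready to feed into an anomaly detector.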

Automated root cause analysis isn’t a luxury but the norm

There is a big difference between reporting that latency has increased and explaining what caused it and why. A solid AI-powered solution should correlate metrics across services, tie changes to specific commits or deployments, and help teams fix issues instead of just reporting them.
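Tying a change to a deployment can start with something as simple as finding when a regression began and listing what shipped shortly before. The sketch below is a heuristic building block, not automated root cause analysis in itself: it detects a sustained latency jump and ranks preceding deployments by recency. The `Deployment` record, the 1.5x factor, the 30-minute look-back, and all data (including the commit IDs) are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Deployment:
    timestamp: float
    service: str
    commit: str


def find_onset(timestamps, values, baseline, factor=1.5, sustain=3):
    """First timestamp where `values` stays above baseline * factor for `sustain` consecutive samples."""
    run = 0
    for i, value in enumerate(values):
        run = run + 1 if value > baseline * factor else 0
        if run == sustain:
            return timestamps[i - sustain + 1]  # start of the sustained breach
    return None  # no sustained breach; single spikes are ignored


def suspect_deployments(onset, deployments, lookback_s=1800):
    """Deployments in the `lookback_s` seconds before the onset, most recent first."""
    ordered = sorted(deployments, key=lambda d: d.timestamp)
    cutoff = bisect_right([d.timestamp for d in ordered], onset)
    recent = [d for d in ordered[:cutoff] if onset - d.timestamp <= lookback_s]
    return list(reversed(recent))


# Hypothetical data: timestamps in seconds, p95 latency in ms, made-up commits.
timestamps = [0, 60, 120, 180, 240, 300, 360, 420]
p95_ms = [100, 101, 99, 100, 102, 200, 210, 205]
deploys = [
    Deployment(100, "search", "a1b2c3"),
    Deployment(250, "cart", "d4e5f6"),
    Deployment(400, "auth", "0719ab"),
]
onset = find_onset(timestamps, p95_ms, baseline=100)
if onset is not None:
    for d in suspect_deployments(onset, deploys):
        print(f"regression from t={onset}s; check {d.service} @ {d.commit}")
```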

Assess support and customization ability

You want a partner, not just a system: someone who can tune models to your tech stack, workloads, and needs. Make sure your vendor is up to it.

Look beyond charts

CTOs need more than colorful dashboards. Look for a solution that translates test results into actionable insights, showing what’s slowing the system down, where the bottlenecks are, and what the team should do next.

A quick look at an AI-driven performance QA pipeline

Most teams have performance testing covered in some way, but what does an AI-driven performance testing pipeline look like? A mature pipeline mirrors production complexity, learns from real system behavior, and provides deep operational insights. Below is a breakdown of what that pipeline usually includes.

1. Test design based on real usage patterns

Modern AI-powered systems integrate with APMs or traffic analyzers to extract real-world usage data and use it to perform the following tasks:

Session clustering: Grouping user sessions by behavior and weighting each group by how often it occurs.

Synthetic scenario generation: Instead of scripting fixed paths, ML models generate dynamic test cases, simulating diverse user flows.

Spike pattern replay: Simulating real-world surges in your tests by reproducing daily usage highs, sudden spikes during flash sales, or traffic spikes on release days.

As a result, teams stop guessing what to test and instead recreate what actually happens.
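Session clustering is often done with k-means or similar algorithms. The pure-Python sketch below clusters sessions on two hypothetical features (pages viewed and session length in minutes) and turns cluster sizes into scenario weights. Real pipelines would use richer features, standardize them first, and choose k more carefully; the deterministic farthest-point initialization is a simplification for reproducibility.

```python
import math


def kmeans(points, k, iterations=50):
    """Plain k-means on tuples of numbers; returns (centroids, clusters)."""
    # Deterministic farthest-point initialization keeps the sketch reproducible.
    centroids = [points[0]]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(farthest)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # New centroid = mean of each cluster; an empty cluster keeps its old centroid.
        new_centroids = [
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # assignments are stable
        centroids = new_centroids
    return centroids, clusters


def scenario_weights(clusters):
    """Share of sessions in each cluster, used as the mix of virtual-user scenarios."""
    total = sum(len(c) for c in clusters)
    return [len(c) / total for c in clusters]


# Hypothetical (pages viewed, minutes) per session; scale features first in practice.
sessions = [(2, 1.0), (3, 1.5), (2, 2.0), (12, 15.0), (14, 18.0), (11, 16.0), (13, 17.0)]
centroids, clusters = kmeans(sessions, k=2)
for centroid, weight in zip(centroids, scenario_weights(clusters)):
    print(f"pages~{centroid[0]:.1f}, minutes~{centroid[1]:.1f}: {weight:.0%} of virtual users")
```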

2. Efficient orchestration across the stack

A modern AI performance testing pipeline tightly integrates with CI/CD, infrastructure provisioning, and observability tools. In this way, it can accurately determine when to run tests, what to target, and how much load to apply.

You can expect:

Kubernetes-based test deployment: Kubernetes or similar orchestration platforms are used to dynamically allocate resources for test environments. AI agents can spin up test runners or ephemeral environments based on triggers from the CI/CD pipeline or infrastructure requirements.

Test load shaping: Based on system health or CI pipeline triggers, AI adjusts test parameters such as concurrency, ramp-up, and the number of virtual users. Each test therefore reflects the actual state of the system, and overprovisioning is prevented.

Automated test triggering: Integrations between observability tools and performance testing frameworks enable automatic test triggering whenever specific patterns – latency spikes or error rate increases – are detected. The system can launch targeted load tests to reproduce and diagnose emerging issues.

As a result, testing is adaptive and environment-aware, while cloud resources are used smartly.

3. Real-time monitoring and anomaly detection

In an AI-powered pipeline, metrics are collected and analyzed in real time, during test execution:

Metrics ingestion: AI-powered pipelines continuously ingest logs and metrics from OpenTelemetry or Prometheus, giving teams a real-time view of the system across distributed environments.

Anomaly detection: Using clustering or statistical models, AI flags deviations in latency, error rate, and container resource usage, detecting bottlenecks before they impact end users.

This greatly reduces the hours teams would otherwise spend manually checking and correlating logs.
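Statistical models for this step need not be complex. One robust option, shown below as an illustrative sketch rather than any product’s method, is the modified z-score based on the median absolute deviation (MAD), which, unlike a mean-based z-score, is not dragged around by the very outliers it is trying to find. The 0.6745 constant and 3.5 cut-off follow the commonly cited Iglewicz and Hoaglin recommendation; the metric names and values are hypothetical.

```python
import statistics


def mad_outliers(samples, threshold=3.5):
    """Indices whose modified z-score, 0.6745 * |x - median| / MAD, exceeds `threshold`."""
    median = statistics.median(samples)
    mad = statistics.median(abs(x - median) for x in samples)
    if mad == 0:
        return []  # at least half the samples equal the median; the score is undefined
    return [i for i, x in enumerate(samples) if 0.6745 * abs(x - median) / mad > threshold]


def flag_metrics(window):
    """window maps metric name -> recent samples; returns only metrics with outliers."""
    flagged = {}
    for name, values in window.items():
        outliers = mad_outliers(values)
        if outliers:
            flagged[name] = outliers
    return flagged


# Hypothetical recent samples per metric during a test.
recent = {
    "p95_latency_ms": [100, 102, 98, 101, 99, 100, 400],
    "error_rate_pct": [0.1, 0.1, 0.2, 0.1, 0.1, 0.1, 0.1],
    "container_cpu_pct": [55, 57, 54, 56, 58, 55, 56],
}
print(flag_metrics(recent))  # {'p95_latency_ms': [6]}
```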

4. AI-based diagnostics and optimization

AI-powered testing solutions go beyond usual test reporting:

Root cause analysis: AI models thoroughly analyze logs and telemetry data to correlate failures with code changes or external events, ensuring faster diagnostics.

Suggestions and recommendations: AI-based systems can provide SRE or QA engineers with recommendations for fixes, refactorings, or infrastructure adjustments.

Log correlation with incident data: Integration with Jira or similar incident management systems helps correlate test logs with known incidents or tickets to identify whether detected issues are regressions or already tracked problems.

Model retraining: The pipeline updates baselines for response time, throughput, and system tolerances after every test run to reduce false positives.

Failure prediction: Predictive models use historical data to anticipate potential defects or performance regressions, so teams can address issues before they cause trouble.
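Updating baselines after every run can be sketched with an exponentially weighted mean and variance, so recent runs count more than old ones. The sketch below is one possible approach under stated assumptions (a 0.2 smoothing factor, a margin of 3 standard deviations or 5% of the mean, and p95 latency as the tracked metric); it skips the update on a flagged run so a regression is not silently absorbed into the new normal.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    mean: float
    variance: float = 0.0
    alpha: float = 0.2  # weight given to the newest run

    def is_regression(self, value, k=3.0, min_rel=0.05):
        # Margin is k standard deviations, but never less than min_rel of the mean,
        # so a very stable history does not turn tiny wiggles into alerts.
        margin = max(k * self.variance ** 0.5, min_rel * self.mean)
        return value > self.mean + margin

    def update(self, value):
        # Incremental exponentially weighted mean and variance.
        diff = value - self.mean
        increment = self.alpha * diff
        self.mean += increment
        self.variance = (1 - self.alpha) * (self.variance + diff * increment)


def process_run(baseline, p95_ms):
    """Flag a regression, or fold a healthy run into the baseline."""
    if baseline.is_regression(p95_ms):
        return "regression"  # kept out of the baseline until a human reviews it
    baseline.update(p95_ms)
    return "ok"


# Hypothetical p95 latency (ms) from consecutive test runs.
baseline = Baseline(mean=200.0)
for p95 in [205, 198, 202, 199, 201, 260]:
    print(p95, process_run(baseline, p95))
```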

What makes AI-powered QA pipelines especially valuable is that they improve over time, helping teams head off issues before they arise.

Is your current approach to performance testing efficient enough? If you feel like you’re lagging behind, teaming up with a QA partner that already has the entire AI performance testing pipeline in place can be the way to go.

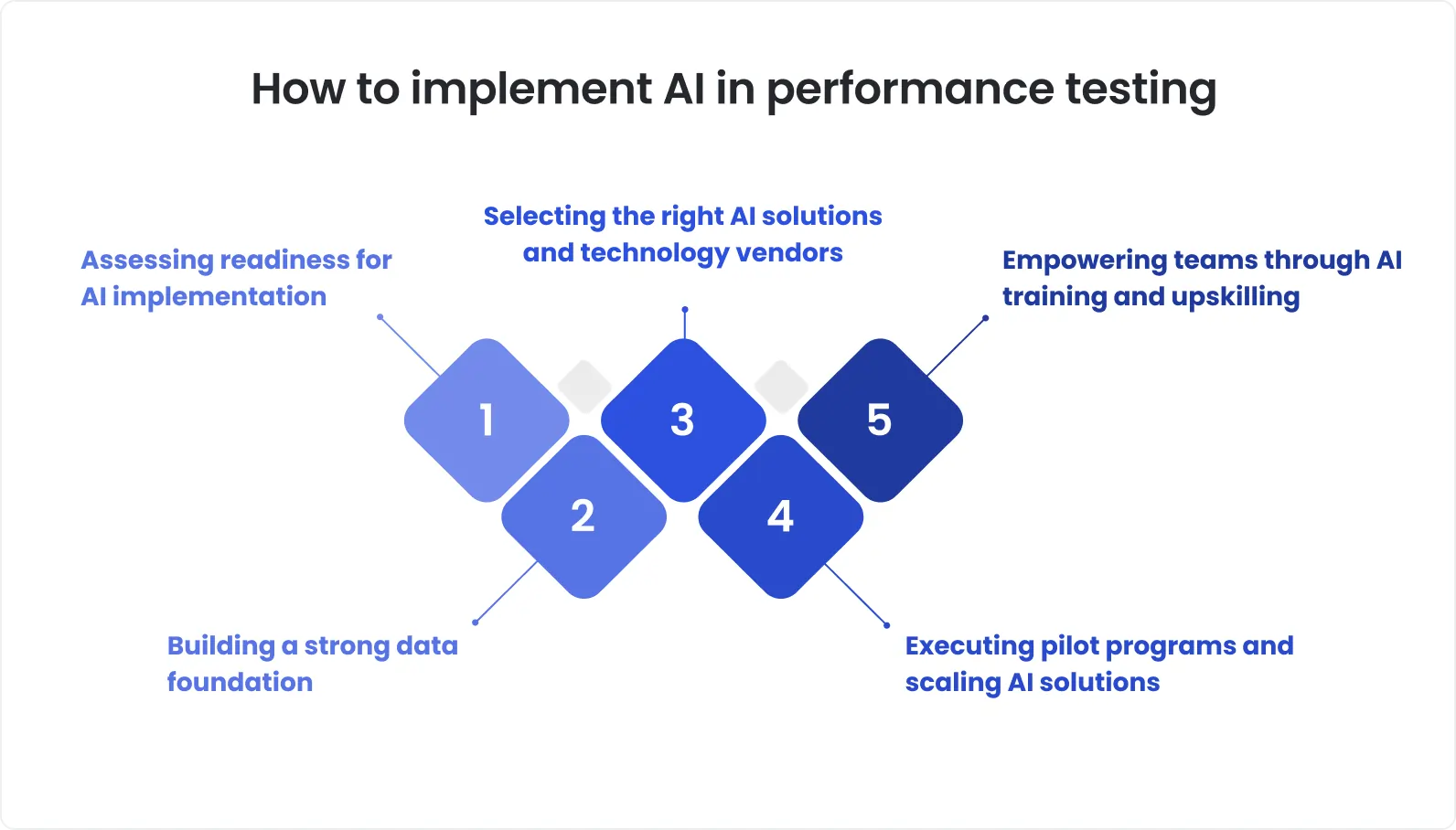

Risk-free introduction of AI in performance testing

Integrating AI into performance testing is indeed a wise move, provided it doesn’t break what already works.

If you’re new to AI performance testing, begin with a small scope, carefully track the results, and stay in control throughout the process.

To mitigate risk, consider the following:

1. Concentrate on a particular use case

Don’t try to inject AI into your entire testing pipeline at once. Instead, focus on one area where AI can bring clear value – for example, anomaly detection in test results or adaptive load scaling – and test it there.

2. Build a narrow PoC

A safe approach is to choose one module or microservice with known bottlenecks and apply AI-powered load analysis or anomaly detection to that component only. This lets you evaluate the impact without system-wide exposure.

3. Leverage shadow testing

Run your AI system in parallel with your existing stack. Let it analyze logs and metrics in real time, but don’t allow it to trigger alerts or affect pipeline decisions. This way, you’ll understand what AI is adding without disrupting production or CI/CD.
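In code, shadow mode mostly means recording what a new detector would have done and never letting it act or fail loudly. The sketch below is a hypothetical harness: the detector is any callable, the window IDs and p95 values are invented, and the summary compares shadow verdicts with alerts your current stack already raised.

```python
def run_shadow(detector, windows, log):
    """Run a candidate detector in shadow mode: record what it would flag, never act on it."""
    flagged = []
    for window_id, value in windows:
        try:
            if detector(value):
                flagged.append(window_id)
                log.append(f"[shadow] would flag window {window_id}")
        except Exception as exc:  # a broken shadow detector must never break the real pipeline
            log.append(f"[shadow] detector error in window {window_id}: {exc}")
    return flagged


def compare_with_current(shadow_flags, current_alerts):
    """Summarize how the shadow detector's verdicts relate to alerts the existing stack raised."""
    shadow, current = set(shadow_flags), set(current_alerts)
    union = shadow | current
    return {
        "agreed": sorted(shadow & current),
        "shadow_only": sorted(shadow - current),   # earlier catches or noise: review manually
        "current_only": sorted(current - shadow),  # possible misses by the new detector
        "jaccard": len(shadow & current) / len(union) if union else 1.0,
    }


log = []
windows = [(1, 100), (2, 350), (3, 400), (4, 120)]  # hypothetical (window id, p95 ms)
shadow = run_shadow(lambda p95: p95 > 300, windows, log)
print(compare_with_current(shadow, current_alerts=[3, 4]))
```

Reviewing the `shadow_only` and `current_only` buckets over a few weeks gives a concrete basis for deciding whether to promote the detector out of shadow mode.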

4. Always keep humans in the loop

Remember that AI tools can still get things wrong. Senior QA engineers should manually review AI-driven insights, particularly root cause explanations, before the team acts on them.

5. Team up with an experienced provider of performance testing services

Partnering with a professional QA team that has a refined AI infrastructure, like DeviQA, can help you avoid painful mistakes and disappointment. Expert guidance will help you apply AI where it truly helps and build lean and reliable performance testing.

Measuring the ROI of AI-driven performance QA

Business stakeholders need to see that AI-enhanced performance testing has delivered tangible results, and as a CTO, it’s part of your job to show exactly what has changed. Keep in mind that ROI shouldn’t be measured by test run speed alone, but by improvements across the entire testing workflow.

4 core metrics that show how AI brings value to performance testing:

1. Engineering time saved

This metric shows if AI has taken over time-consuming and repetitive tasks like log scanning, baseline comparison, and anomaly diagnostics that used to eat up hours of manual effort. Also, it lets you understand if your QA process is becoming leaner, catching issues early instead of dealing with them after they cause problems.

2. Frequency of unplanned downtime

By tracking how often the system goes down, you can evaluate whether the AI-based approach has improved its stability. Fewer outages suggest that AI performance testing enables earlier detection of performance regressions and ensures more predictable releases.

3. Edge case coverage

This tells you if your software is actually being tested under a variety of non-standard conditions, like sudden traffic spikes, geo-specific loads, or unusual usage patterns. If your edge case coverage is going up, your users are less likely to run into surprises. Good AI-powered load models should be able to simulate real-world conditions that are hard to reproduce manually, helping to spot issues before they affect real users.

4. Reduction in false positives

A lower false positive rate means fewer hours spent chasing problems that don’t exist. With the traditional approach, flaky alerts are the norm. An efficient AI solution should filter out the noise and surface what matters, so your team doesn’t waste time on irrelevant issues.
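Two of these metrics reduce to simple arithmetic once alerts are triaged. The sketch below computes the share of alerts that turned out to be false (one minus precision) and the triage hours they consumed, before and after introducing AI. The `AlertPeriod` record is hypothetical, all numbers are invented placeholders, and the per-alert triage time is an assumption you would measure for your own team.

```python
from dataclasses import dataclass


@dataclass
class AlertPeriod:
    alerts: int                      # alerts raised during the period
    confirmed: int                   # alerts a human confirmed as real problems
    triage_minutes_per_alert: float

    @property
    def false_alert_share(self):
        """Fraction of alerts that were not real problems (1 - precision)."""
        return (self.alerts - self.confirmed) / self.alerts if self.alerts else 0.0

    @property
    def triage_hours(self):
        return self.alerts * self.triage_minutes_per_alert / 60


def roi_summary(before, after):
    return {
        "false_alert_share_before": round(before.false_alert_share, 2),
        "false_alert_share_after": round(after.false_alert_share, 2),
        "triage_hours_saved": round(before.triage_hours - after.triage_hours, 1),
    }


# Hypothetical quarter-over-quarter figures.
print(roi_summary(AlertPeriod(120, 30, 20), AlertPeriod(40, 28, 20)))
```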

Together, these metrics show whether AI-enhanced performance testing addresses real inefficiencies. They also speak to what business stakeholders actually care about: fewer disruptions, smarter resource allocation, and better product performance.

The hybrid approach – the future of performance QA

Neither automation nor human expertise alone meets the needs of modern performance testing. The future lies in a hybrid approach that combines AI with the judgment of experienced QA professionals.

In such a hybrid performance QA model, AI tools monitor system behavior 24/7, catching anomalies that would likely go unnoticed in manual reviews. These observations are passed along to QA leads and client-side experts who know the product inside out, understand the business priorities, and are aware of customer expectations. This way, every flagged issue is thoroughly evaluated before action is taken.

This teamwork between AI and QA engineers ensures a more reliable testing process. While AI handles continuous data collection and in-depth analysis, people jump in to make sense of it and decide on the next steps.

It also makes the whole process more transparent. Rather than relying on vague alerts or dashboards, stakeholders get clear explanations that help them understand what’s happening and why it matters.

By blending the capabilities of artificial intelligence with human expertise, hybrid performance QA ensures faster issue detection, smarter decision-making, and more stable software.

Final checklist for CTOs

To succeed with AI-powered performance testing, make sure you are able to check all the boxes below. But even if not, chin up – there are professional QA providers like DeviQA that can handle AI performance testing end-to-end.

- Assess AI tools for performance testing

Or rely on our refined AI infrastructure. We’ve already vetted what works best and tossed out what doesn’t.

- Integrate AI performance testing into your existing pipeline

Or let our team integrate it without interrupting your workflows and deployments.

- Detect performance issues early, not after release

Or engage DeviQA experts to set up continuous monitoring and targeted diagnostics that flag issues before they cause trouble.

- Use performance data to make smart decisions, not create dashboards

Or team up with us to receive valuable insights, not a flood of charts and logs.

- Reduce false alarms

Or take advantage of our fine-tuned QA process that highlights what deserves your attention and ignores the rest.

- Test edge cases under real-world conditions

Or bring in our expertise in simulating traffic surges, global access, and the unusual usage patterns your users actually exhibit.

- Balance AI capabilities with human expertise

Or try our hybrid model that blends AI’s efficiency with QA judgment to align with your business needs.

AI-enhanced performance testing requires expertise to deliver real value. Whether you need end-to-end support or just expert QA consulting, DeviQA is ready to plug in where you need us. Just get in touch.