
Mobile Automated Testing of the Messenger with an Encryption Algorithm

Chief Technology Officer

This article is a brief guide to what you may encounter during automated testing of a mobile application such as an instant messenger. We will talk about running tests on several real devices at once, adding or replacing devices, test logging, restarting failed tests, and running tests in parallel threads.

These are some of the challenges you may face when testing a mobile instant messenger, and we are ready to share our solutions.

Project Summary

Our client developed a highly secure messenger for enterprise companies that can make calls, send text and broadcast messages, share files, etc. The main task was to design, develop, and integrate automated tests into the project's quality assurance process, to spend the testing budget more efficiently, and to ensure a structured testing approach with minimal human involvement.

Background and significance

Our client had a seven-person in-house quality assurance (QA) team that was responsible for the quality of this project. The team primarily performed manual testing and had developed ~3,000 test cases to cover all messenger functionality. Manual regression testing took around five business days for one platform and one device. Because of the large number of test devices and platforms, the client could deploy a new build for its customers no more often than once every two months, and the client's customers were not satisfied with that release speed. It was the right time to introduce automated testing: the test cases for all major functionality had been developed, and the UI was stable and did not require redesign.

A total of four QA automation engineers were allocated to the project. The first to join was the QA automation lead, who worked on the architecture of the automated test suite, the general approach to automated testing, and the infrastructure set-up for the entire project. After two weeks, during which the lead gained a general understanding of the project and finished the architecture work, three more QA automation engineers joined the project to develop automated tests. The main responsibilities of the QA automation team included automated test development, integration with third parties, reporting the bugs found, adding multithreading to speed up the automated test run, configuring the reporting system, test maintenance, code review, etc.

Project aims/goals

There were several goals to bring the automated testing into place:

Methodology / work undertaken

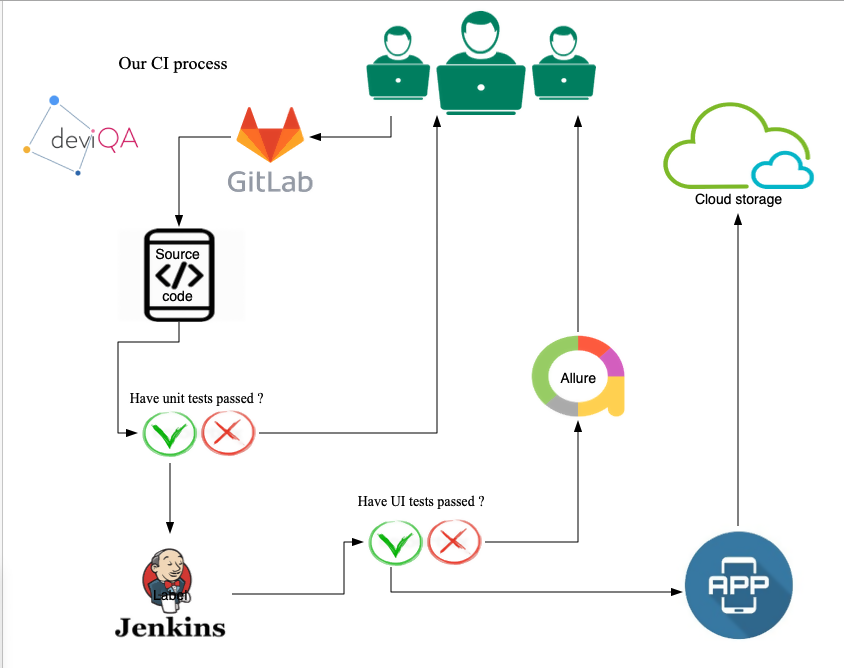

To implement the client's requirements, we decided to use a time-tested technology stack: Java + Appium + TestNG + Allure. Appium uses standard APIs, which makes it a good choice when developing tests for both Android and iOS applications. To make our test solution more robust and effective, we developed a continuous integration solution based on Jenkins, which allowed us to fully automate delivery. Managers and engineering leads automatically received a test build or, in case of failures, bug reports. Once the developers pushed code to the release branch and the unit tests passed, our automated test suite started. There was no need to ask QA engineers to start a test run because everything was performed automatically. Moreover, the CI solution allowed us to store test run statistics, manage test reports, and use third-party integrations, e.g. with Jira (ticket and team management system) and TestRail (test case management system). We used Jenkins because it is a flexible tool with a broad set of plug-ins, which let us integrate it with our tech stack. Our CI solution worked based on the following algorithm:

For the reporting system, we chose Allure. The reports generated by this framework look clean and modern, and they can be customised through flexible settings. A report provides a great deal of useful information about each test, such as its duration, the link to the test case, and the body of the test. It includes all the steps and their durations, as well as screenshots from each device if the test fails. A great feature of the report is its history, which lets a QA engineer easily identify the areas of the application that cause the most issues. It also provides information such as the bugs per component and the number of flaky tests. When Allure is used on the CI server, the history of test runs becomes an insight into the application quality. In addition, the report contains information about all re-runs of failed tests, with screenshots and error descriptions, which lets you determine precisely where a test is unstable and requires attention.

Challenges / obstacles overcome

Execute tests using at least three real devices

During development, one of the key challenges was to start the Appium server automatically so that at least three real devices could be connected in parallel. Since the main functions of the application are messaging and calls, we needed several mobile devices to check that a message sent from one device was received on another (or that a conference call was set up, etc.). To achieve this, we used a multi-threaded architecture that runs several Appium instances on a test farm at the same time. In addition, we developed a controller that synchronised operations between devices: once a chain of operations finished on one device, the controller switched to another device to perform the next set of operations.
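The hand-over between devices can be illustrated with a minimal sketch. It is not the project's actual controller: the class name `DeviceController`, its methods, and the device names are hypothetical, and the Appium sessions are replaced by plain `Runnable` steps so that the example runs on its own. Each device gets one worker thread, and the controller waits for a chain of operations to finish before it switches to the next device.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical controller: one worker thread per device, chains run strictly in turn. */
class DeviceController implements AutoCloseable {
    private final Map<String, ExecutorService> workers = new ConcurrentHashMap<>();

    /** Registers a device; a real suite would also create its Appium session here. */
    void register(String deviceName) {
        workers.put(deviceName, Executors.newSingleThreadExecutor());
    }

    /** Runs one chain of operations on the given device and blocks until it has finished. */
    void on(String deviceName, Runnable chain) throws InterruptedException, ExecutionException {
        ExecutorService worker = workers.get(deviceName);
        if (worker == null) {
            throw new IllegalArgumentException("Unknown device: " + deviceName);
        }
        // get() waits for completion, so the caller switches devices only after
        // this chain is done; a failure on the device surfaces as ExecutionException.
        worker.submit(chain).get();
    }

    @Override
    public void close() {
        workers.values().forEach(ExecutorService::shutdown);
    }

    public static void main(String[] args) throws Exception {
        List<String> events = Collections.synchronizedList(new ArrayList<>());
        try (DeviceController controller = new DeviceController()) {
            controller.register("sender");
            controller.register("receiver");
            controller.on("sender", () -> events.add("sender: send 'hello'"));
            controller.on("receiver", () -> events.add("receiver: check 'hello' arrived"));
        }
        events.forEach(System.out::println);
    }
}
```

Because `on` blocks, the order of steps in the test body is the order in which the devices act, which keeps a multi-device scenario readable as a simple script.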

Adding a new device or replacing an existing one

One of the key requirements was the ability to run tests on different devices with minimal effort. As we used a cloud provider to obtain devices for a test run, we did not know the device identifiers before the tests started. We solved this by using the cloud provider's API to obtain information about the available pool of devices. We then parsed the response and stored it in a separate config file that is read in a loop; thus, to add or remove a device, you only need to add or delete a couple of lines in the config. All the capabilities needed to initialise the Appium driver (a driver is an object that manages all interactions with the device) are taken from the config: platform version, WDA port, UDID, etc. Each driver instance, which controls a separate device, is saved to the driver manager and is available during test execution.
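A config-driven device list of this kind might look like the sketch below. The file layout (a `devices` line plus `name.key` entries), the class name `DeviceConfig`, and the sample values are assumptions made for illustration, not the project's real format. The capability keys follow Appium's naming (`platformName`, `platformVersion`, `udid`, `wdaLocalPort`). In a real suite each map would be passed to the Appium driver constructor; here the sketch stops at the capability maps.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;

/** Hypothetical loader: turns a device config into one capability map per device. */
class DeviceConfig {
    // Capability keys looked up for every device; a missing key is simply skipped.
    private static final String[] KEYS = {"platformName", "platformVersion", "udid", "wdaLocalPort"};

    static Map<String, Map<String, String>> load(String configText) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(configText));

        Map<String, Map<String, String>> byDevice = new LinkedHashMap<>();
        String devicesLine = props.getProperty("devices", "").trim();
        if (devicesLine.isEmpty()) {
            return byDevice;
        }
        // Loop over the configured device names; adding a device means adding
        // its name to "devices" and a few "name.key=value" lines.
        for (String name : devicesLine.split("\\s*,\\s*")) {
            Map<String, String> capabilities = new LinkedHashMap<>();
            for (String key : KEYS) {
                String value = props.getProperty(name + "." + key);
                if (value != null) {
                    capabilities.put(key, value.trim());
                }
            }
            byDevice.put(name, capabilities);
        }
        return byDevice;
    }

    public static void main(String[] args) throws IOException {
        // Sample values are made up for the example.
        String config = String.join("\n",
                "devices=iphone, pixel",
                "iphone.platformName=iOS",
                "iphone.platformVersion=13.3",
                "iphone.udid=FAKE-UDID-1",
                "iphone.wdaLocalPort=8100",
                "pixel.platformName=Android",
                "pixel.platformVersion=10",
                "pixel.udid=FAKE-UDID-2");
        load(config).forEach((name, caps) -> System.out.println(name + " -> " + caps));
    }
}
```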

Test logging

Another problem was logging. We had several drivers, each associated with a separate device, and we needed a log that was readable and easy to check. We decided to use annotations and a special logger class, developed by our test engineers, that kept an individual log for each driver. This significantly improved the structure of the logs and let us store logs from different devices in one place. As a result, even non-technical team members could easily understand how the tests behaved.
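One way to keep per-device entries readable in a single log is to hand each driver its own tagged logger that writes to a shared sink. The sketch below shows only that idea; the names `TestLog` and `DeviceLogger` and the device names are hypothetical, and the annotation-based part of the original solution is not reproduced.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical shared log: every device writes through its own tagged logger. */
class TestLog {
    private final List<String> lines = Collections.synchronizedList(new ArrayList<>());

    /** A logger bound to one device. */
    interface DeviceLogger {
        void step(String message);
    }

    /** Returns a logger that prefixes every entry with the device name. */
    DeviceLogger forDevice(String deviceName) {
        return message -> lines.add("[" + deviceName + "] " + message);
    }

    /** Returns a snapshot of all entries in the order they were written. */
    List<String> lines() {
        synchronized (lines) {
            return new ArrayList<>(lines);
        }
    }

    public static void main(String[] args) {
        TestLog log = new TestLog();
        DeviceLogger sender = log.forDevice("iPhone 11");
        DeviceLogger receiver = log.forDevice("Pixel 4");
        sender.step("Send message 'hello'");
        receiver.step("Message 'hello' is displayed in the chat");
        log.lines().forEach(System.out::println);
    }
}
```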

Restarting failed tests

The stability of a UI test depends on many factors: server delays, dynamic content, infrastructure issues, etc. We tried to anticipate all these cases, but despite our efforts some tests could still be flaky. The root cause of each flaky test still needed to be found, but as a short-term solution we set up an algorithm for restarting failed tests, using a transformer of test annotations. Also using annotations, we divided tests into categories, which allowed our client to run tests for just the functionality that needed to be checked. For example, if developers worked on a particular feature (e.g., payments), only the scenarios related to that feature could be run, saving the time of a full regression run. This resolved two issues: we improved the stability of the tests and made them more configurable.
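The retry decision itself can be sketched in plain Java so that it runs without TestNG on the classpath. This is a stand-in, not the project's code: the `Retry` class and its `run` method are hypothetical. In TestNG the same attempt counter would typically live in an `IRetryAnalyzer` implementation, which an `IAnnotationTransformer` can attach to every test via `setRetryAnalyzer`.

```java
/** Hypothetical retry helper: re-runs a failing test body a limited number of times. */
class Retry {
    /**
     * Runs the test body up to maxAttempts times.
     * Returns the number of the attempt that passed, or throws once the budget is used up.
     */
    static int run(int maxAttempts, Runnable testBody) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        Throwable lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                testBody.run();
                return attempt;
            } catch (RuntimeException | AssertionError failure) {
                // Remember the failure and try again while attempts remain.
                lastFailure = failure;
            }
        }
        throw new AssertionError("Test failed after " + maxAttempts + " attempts", lastFailure);
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated flaky test: fails on the first call, passes on the second.
        int passedOn = run(3, () -> {
            if (++calls[0] < 2) {
                throw new IllegalStateException("element not found yet");
            }
        });
        System.out.println("Passed on attempt " + passedOn);
    }
}
```

Keeping the attempt limit small matters: a retry hides flakiness only temporarily, and the recorded re-runs are what point to the tests that need a proper fix.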

Run several threads in parallel

We had a strict requirement: the test run should take no longer than 48 hours, which is why we sped up the run by using several threads in parallel. This allowed us to run multiple tests on multiple devices simultaneously. The main difficulty was that a test using several devices needs those devices to interact with each other, so we developed our own thread manager that managed threads inside threads. To simplify the logic for a full test run, we used a predefined total number of threads. The thread manager reserved that number of threads and waited for each test to report how many devices it needed. Once it had the number of devices for a test, the thread manager spawned one child thread per required device.
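The "threads inside threads" idea can be approximated with a counting semaphore: a fixed budget of slots, from which each test reserves as many as it needs devices before its child threads start. The sketch below is a simplified illustration under that assumption. The class `DeviceThreadManager` and its methods are hypothetical, and the real manager's hand-shake with the test is reduced to a `devicesNeeded` argument.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntConsumer;

/** Hypothetical thread manager: a fixed budget of slots shared by multi-device tests. */
class DeviceThreadManager {
    private final int totalSlots;
    private final Semaphore slots;

    DeviceThreadManager(int totalSlots) {
        this.totalSlots = totalSlots;
        this.slots = new Semaphore(totalSlots, true); // fair: waiting tests are served in order
    }

    /** Blocks until enough slots are free, then runs one child thread per device. */
    void runTest(int devicesNeeded, IntConsumer perDevice) throws InterruptedException {
        if (devicesNeeded < 1 || devicesNeeded > totalSlots) {
            throw new IllegalArgumentException("devicesNeeded must be between 1 and " + totalSlots);
        }
        slots.acquire(devicesNeeded);
        try {
            List<Thread> children = new ArrayList<>();
            for (int i = 0; i < devicesNeeded; i++) {
                final int deviceIndex = i;
                Thread child = new Thread(() -> perDevice.accept(deviceIndex), "device-" + i);
                children.add(child);
                child.start();
            }
            // Wait for every device thread; exceptions thrown inside a child
            // are not propagated in this sketch.
            for (Thread child : children) {
                child.join();
            }
        } finally {
            slots.release(devicesNeeded);
        }
    }

    int freeSlots() {
        return slots.availablePermits();
    }

    public static void main(String[] args) throws InterruptedException {
        DeviceThreadManager manager = new DeviceThreadManager(4);
        AtomicInteger devicesUsed = new AtomicInteger();
        manager.runTest(3, deviceIndex -> devicesUsed.incrementAndGet());
        System.out.println("Devices used: " + devicesUsed.get() + ", free slots: " + manager.freeSlots());
    }
}
```

Several tests can call `runTest` from their own parent threads at once; a test that needs more devices than are currently free simply waits until another test releases its slots.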

Results

A massive amount of work was performed. Over five months, 3,200 test cases were covered by 1,730 automated tests. Some test cases were combined into one automated test because a one-to-one mapping between test cases and automated tests did not always make sense. The test run was divided into 23 threads, and a total of 46 real devices were used to run tests in the cloud. A full run took 46 hours and 38 minutes, which means that the client can deploy a new build for its customers every two days. Unfortunately, two manual QA engineers were not sufficient to check new features, so management decided to keep three manual QA engineers for testing new features only. The average yearly salary of a software engineer in test in Switzerland is around 85,200 CHF, so reducing the manual QA team from seven to three people saved the company about 340,800 CHF per year (4 × 85,200 CHF). After we completed the initial scope, three of the four QA automation engineers left the project, and one remained to maintain the automated tests and cover new functionality.

Evaluation

We are proud that we achieved the main goals of the project. We built a robust automated test suite with a complex multithreading architecture and integrated it into a continuous delivery process. With this integrated automated testing process, our client can significantly speed up delivery to its customers, can gain a full picture of the quality of an application build in two days, can minimise manual testing effort, has reduced its manual QA team by roughly a factor of two, and has saved a considerable amount of money. We had an idea to simplify testing by using API calls to trigger actions in tests that require two sides, such as making a call or sending broadcast messages, but our client declined and asked us to implement multi-device interaction in the form in which end users experience it.