Most patient communication failures are never logged as bugs.

They surface as missed appointments, incomplete follow-ups, and declining response rates. In outpatient care, 20–30% of appointments are missed, often due to breakdowns in reminders and follow-up communication.

Based on our work with CipherHealth, a patient engagement platform, this article shares where multi-channel communication breaks, why QA teams often miss it, and practical lessons on how to test patient journeys across web, mobile, SMS, and voice as one system.

Why multi-channel patient communication breaks easily

Multi-channel patient communication systems tend to fail at coordination, not execution. This is a core challenge in healthcare communication software testing.

Individually, web portals, mobile apps, SMS gateways, and voice systems are usually stable and well-tested. The breakdown occurs where these components are expected to work together as a continuous, stateful workflow, something most QA setups don’t explicitly validate.

From real-world patient engagement platforms, several structural weaknesses consistently emerge:

Channel orchestration is implicit, not enforced. Business logic assumes correct sequencing between channels, but rarely verifies it under real timing and retry conditions.

State propagation is fragile. Patient context may exist in one channel but fail to persist when the journey transitions (for example, from SMS to browser or from mobile interaction to voice follow-up).

Asynchronous behavior is under-tested. Delays, retries, partial responses, and race conditions between systems create edge cases that are not covered by linear test scenarios.

Operational visibility lags behind reality. Patient interactions can succeed on the surface while downstream systems (analytics, dashboards, care team views) reflect incomplete or inconsistent data.

These failures don’t surface as defects during release validation. They surface operationally, as broken workflows discovered through analytics anomalies, support tickets, or post-release audits.
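One way to make orchestration explicit rather than implicit is to model the journey as a small state machine that rejects out-of-order transitions. The sketch below is only an illustration of that idea: the state names, the `ALLOWED` table, and the `JourneyTracker` class are hypothetical and are not taken from any real platform.

```python
from dataclasses import dataclass, field

# Hypothetical journey states and the transitions allowed between them.
ALLOWED = {
    "created": {"sms_sent"},
    "sms_sent": {"link_opened", "voice_call_placed"},
    "link_opened": {"survey_completed", "voice_call_placed"},
    "voice_call_placed": {"survey_completed"},
    "survey_completed": set(),
}


class InvalidTransition(Exception):
    """Raised when a channel tries to move the journey out of order."""


@dataclass
class JourneyTracker:
    patient_id: str
    state: str = "created"
    history: list = field(default_factory=list)

    def advance(self, new_state: str, channel: str) -> None:
        # Reject any transition the table does not explicitly allow.
        if new_state not in ALLOWED[self.state]:
            raise InvalidTransition(f"{self.state} -> {new_state} via {channel}")
        self.history.append((self.state, new_state, channel))
        self.state = new_state
```

A cross-channel test can then drive the tracker with the events each channel emits and fail as soon as, for example, a voice call is placed after the survey was already completed.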

What needs to be tested in patient communication platforms

Patient communication platforms must be tested as continuous workflows, the foundation of effective multi-channel patient communication testing.

In our work with CipherHealth, patient journeys routinely crossed web portals, mobile interfaces, SMS outreach, and automated voice follow-ups. Early QA efforts focused on validating each channel independently, but recurring issues appeared only once patients moved between them.

The areas that ultimately required explicit test coverage were:

1. Web and mobile patient flows

Patient surveys and interactions had to behave consistently across devices, including interrupted sessions, partial completions, and re-entry through different channels without losing context.

2. SMS links and callbacks

Links embedded in reminders needed to preserve patient state, remain valid across devices, and correctly resume workflows. Edge cases such as delayed openings, expired links, and retries were frequent sources of silent failures.
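To show what "preserving state in a link" can mean in a test, here is a minimal sketch of a signed, expiring resume token. It assumes the link carries the patient's position in the workflow. The token layout, the `SECRET` key, and both function names are hypothetical, and a real platform may handle this quite differently (for example with server-side sessions).

```python
import base64
import hashlib
import hmac
import json

SECRET = b"test-only-secret"  # assumption: a per-environment signing key


def make_token(patient_id: str, step: int, expires_at: float) -> str:
    """Encode the resume point and sign it so it cannot be altered."""
    payload = json.dumps(
        {"pid": patient_id, "step": step, "exp": expires_at}, sort_keys=True
    ).encode()
    sig = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + sig


def resume_from_token(token: str, now: float):
    """Return ("ok", data), ("expired", None) or ("invalid", None)."""
    encoded, _, sig = token.partition(".")
    try:
        payload = base64.urlsafe_b64decode(encoded.encode())
    except ValueError:  # malformed base64
        return ("invalid", None)
    expected = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return ("invalid", None)
    data = json.loads(payload)
    if now >= data["exp"]:
        return ("expired", None)
    return ("ok", data)
```

Passing `now` in as a parameter lets a test cover delayed openings and expired links without waiting in real time.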

3. Voice / IVR triggers and fallbacks

Automated calls were triggered conditionally, based on patient behavior in earlier channels. QA validation had to confirm correct timing, suppression logic, and fallback behavior when SMS or web interactions remained incomplete.
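The timing and suppression rules described here become easy to test once the decision is a pure function of its inputs. The following sketch assumes a single fixed delay before the voice fallback. The 24-hour value, the parameter names, and the function itself are illustrative assumptions, not the rules used in the project.

```python
from datetime import datetime, timedelta
from typing import Optional

FALLBACK_DELAY = timedelta(hours=24)  # assumption: illustrative wait before calling


def should_place_voice_call(
    sms_sent_at: datetime,
    completed_at: Optional[datetime],
    opted_out_of_voice: bool,
    now: datetime,
) -> bool:
    """Decide whether the voice fallback should fire at time `now`."""
    if opted_out_of_voice:
        return False  # never call patients who declined voice contact
    if completed_at is not None:
        return False  # suppression: the patient already finished via SMS or web
    return now - sms_sent_at >= FALLBACK_DELAY
```

Boundary cases (one minute before the delay elapses, exactly at the delay, completion just before the call) are where this kind of logic tends to go wrong, so those are worth asserting explicitly.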

4. Data consistency across all channels

Patient responses captured in any channel had to be reflected reliably in operational dashboards and reporting systems used by care teams. Even small delays or mismatches created downstream confusion.
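A consistency check of this kind can be expressed as a reconciliation between what the channels recorded and what the dashboard shows. The sketch below assumes each event and each dashboard row is a plain dictionary with the field names shown. Those names and the `find_dashboard_mismatches` helper are hypothetical.

```python
def find_dashboard_mismatches(channel_events, dashboard_rows):
    """Compare the latest answer per (patient, question) with the dashboard.

    Returns keys that are missing from the dashboard and keys whose
    dashboard value differs from the most recent channel response.
    """
    latest = {}
    # Later events overwrite earlier ones, regardless of channel.
    for ev in sorted(channel_events, key=lambda e: e["ts"]):
        latest[(ev["patient_id"], ev["question_id"])] = ev["answer"]

    shown = {
        (row["patient_id"], row["question_id"]): row["answer"]
        for row in dashboard_rows
    }
    missing = sorted(k for k in latest if k not in shown)
    stale = sorted(k for k in latest if k in shown and shown[k] != latest[k])
    return {"missing": missing, "stale": stale}
```

Run after a cross-channel scenario, an empty result in both lists is the assertion that the care team's view matches what patients actually submitted.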

The key lesson from this case was structural:

Patient communication platforms operate as a single stateful system, even though they’re delivered through multiple channels. QA must validate that shared system behavior, or orchestration failures will escape detection and reach production unnoticed.

Common QA gaps in multi-channel healthcare apps

Most multi-channel healthcare platforms don’t fail because QA is missing. They fail because QA is misaligned with how the system actually behaves in production.

The gaps are consistent across patient engagement products.

Channels tested in isolation. What gets tested: SMS sends, web loads, calls trigger. What gets missed: journeys fail when patients move between channels.

Broken handoffs. What gets tested: happy-path redirects. What gets missed: SMS → web or interaction → voice follow-ups lose context.

Timing & state-sync issues. What gets tested: immediate responses. What gets missed: delays, retries, and partial completions desync systems.

Post-release-only bugs. What gets tested: builds look “green”. What gets missed: failures surface weeks later in analytics, not logs.

A few field observations from real patient engagement systems:

Most orchestration defects are time-based, not functional. They only appear when hours or days pass between interactions.

Retries and fallbacks are rarely tested, yet they execute more often than primary paths in real patient behavior.

Dashboards can lag behind reality, showing incomplete data even when patient actions technically succeeded.

Channel providers behave differently at scale, exposing issues that never appear in QA environments.

In the CipherHealth case, these gaps surfaced only as patient volume and workflow complexity increased. Individual components remained stable, but cross-channel behavior degraded quietly over time.

The core problem is pattern-based:

QA validates correctness at a moment in time, while patient communication platforms must remain correct across channels and over time.
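Because these defects depend on elapsed time, tests need a clock they control. The sketch below shows one common pattern: inject a fake clock and step it forward by hours or days inside a single test. `FakeClock` and `ReminderScheduler` are hypothetical stand-ins, and the retry policy is an assumption chosen for illustration.

```python
class FakeClock:
    """A clock the test moves forward manually (seconds since start)."""

    def __init__(self, start: float = 0.0):
        self.now = start

    def advance(self, seconds: float) -> None:
        self.now += seconds


class ReminderScheduler:
    """Hypothetical policy: one initial send, then up to `max_retries`
    resends spaced `retry_interval` seconds apart, until acknowledged."""

    def __init__(self, clock: FakeClock, retry_interval: float, max_retries: int):
        self.clock = clock
        self.retry_interval = retry_interval
        self.max_retries = max_retries
        self.sent_at = []
        self.acknowledged = False

    def tick(self) -> None:
        # Stop once the patient responded or the retry budget is used up.
        if self.acknowledged or len(self.sent_at) >= 1 + self.max_retries:
            return
        if not self.sent_at or self.clock.now - self.sent_at[-1] >= self.retry_interval:
            self.sent_at.append(self.clock.now)
```

With this structure, a journey that takes three days in production can be replayed in milliseconds, including the retry and give-up paths that linear test scenarios skip.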

Why manual QA and smoke tests aren’t enough

In healthcare SaaS, manual QA and smoke tests are designed to answer a narrow question: does the system work right now?

Multi-channel patient communication platforms require a different question: will the system still behave correctly after users interact across channels and time?

The mismatch shows up quickly when release frequency increases.

Patient engagement platforms often ship weekly or biweekly updates that affect messaging logic, timing rules, or workflow conditions. Each release may technically be small, but its impact propagates across web, mobile, SMS, and voice systems. Manual validation cannot realistically re-cover those cross-channel permutations on every deployment.

Smoke tests fail for structural reasons:

They validate availability, not orchestration

They confirm primary flows, not fallbacks and retries

They execute in minutes, while real patient journeys unfold over hours or days

Manual QA is further limited by human behavior constraints. Testers tend to follow expected paths, reset test data frequently, and complete flows in a single session. Real patients interrupt journeys, switch devices, ignore messages, return later, or trigger follow-ups asynchronously.

In the CipherHealth case, many high-impact issues passed manual regression and smoke validation because the system behaved correctly in short, linear test windows. Failures emerged only after release, when workflows executed over time and across channels at scale.

For multi-channel healthcare platforms, manual QA is useful for surface validation, but it is structurally incapable of proving system reliability. Without automation and system-level tests, cross-channel failures are not simply tests that someone forgot to run; they are scenarios that cannot be tested by hand.

What a scalable QA approach looks like

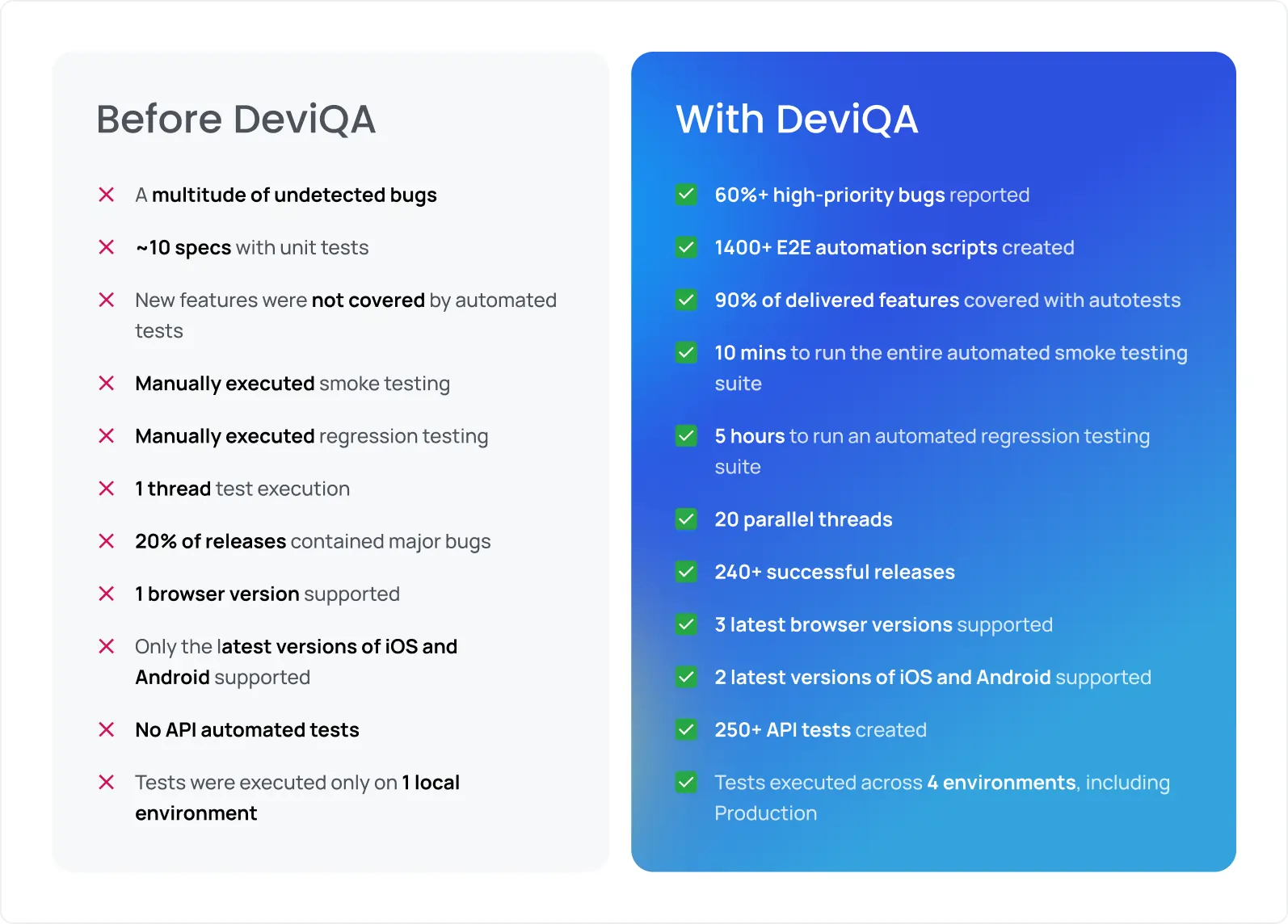

Scalable QA for patient communication platforms means shifting the unit of validation from features to full patient journeys. In the CipherHealth case, this shift delivered measurable impact.

How we scaled QA, and what it produced

1. End-to-end, cross-channel coverage

We built more than 1,400 automated web scenarios and 100+ mobile scenarios to cover critical user flows across web and mobile clients. Those E2E scripts tested full journeys, from outreach triggers through SMS/web/voice follow-ups to dashboard updates, ensuring orchestration worked end-to-end.

2. API-level validation for workflows and data integrity

Alongside UI tests, we added 250+ automated API tests, covering backend logic, state transitions, retry and fallback mechanisms, and data propagation consistency. This API layer became the backbone for verifying asynchronous flows, error handling, and inter-channel handoffs that UI-only tests tend to miss.
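As an illustration of the kind of check an API-level test can make, the sketch below simulates a provider redelivering the same callback and asserts that the response is recorded only once. It uses an in-memory stand-in rather than a real endpoint. `CallbackHandler`, `deliver_with_retries`, and the `delivery_id` field are hypothetical names, and the deduplication strategy is an assumption.

```python
class CallbackHandler:
    """In-memory stand-in for a response endpoint (hypothetical)."""

    def __init__(self):
        self.seen_delivery_ids = set()
        self.responses = []

    def handle(self, delivery_id: str, patient_id: str, answer: str) -> str:
        # A redelivered callback must not create a second response record.
        if delivery_id in self.seen_delivery_ids:
            return "duplicate"
        self.seen_delivery_ids.add(delivery_id)
        self.responses.append((patient_id, answer))
        return "recorded"


def deliver_with_retries(handler, delivery_id, patient_id, answer, attempts):
    """Simulate a provider that redelivers the same callback several times."""
    return [handler.handle(delivery_id, patient_id, answer) for _ in range(attempts)]
```

The same test shape applies to a real HTTP endpoint: send the identical payload several times, then assert on the stored state rather than on the status codes alone.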

3. CI-integrated automation with parallel execution

We configured automated test suites to run across up to 20 parallel threads, cutting smoke-test execution time to 10 minutes and full regression runs to about 5 hours.

As a result, the team shipped over 240 successful releases without major cross-channel regressions.

This structured approach allowed the team to deliver frequent updates and new features while keeping patient communication flows stable. Cross-channel orchestration bugs stopped being “occasional surprises” and became a thing of the past.

The real outcome of proper multi-channel QA

When multi-channel QA is in place, the impact is practical and visible:

Fewer missed or incomplete patient communications

Stable, predictable releases without slowing delivery

Cross-channel flows that preserve patient context

Operational dashboards teams can rely on

Less manual work to correct post-release issues

Over time, this creates trust: the system behaves consistently across web, mobile, SMS, and voice, even as release velocity increases.

If your patient communication platform spans multiple channels, a focused QA review can quickly show where journeys are breaking and how to fix them.